Set up human evaluators

A guide on how to set up human evaluators.

Although LLMs are powerful, they are not perfect. They can make mistakes, and some of those mistakes are hard to detect automatically. Human review lets you verify the quality of LLM output and check the accuracy of your AI evaluators.

1

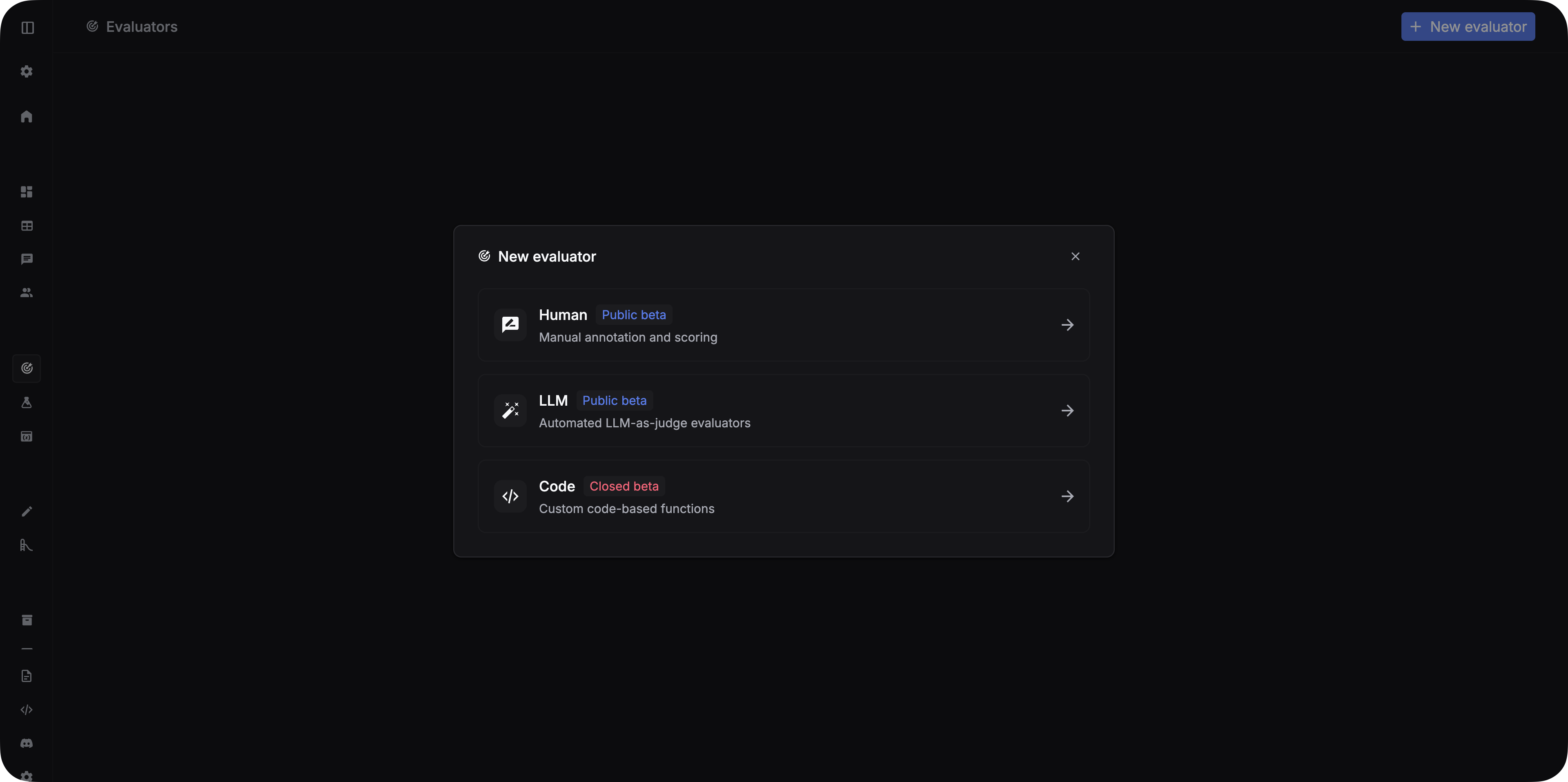

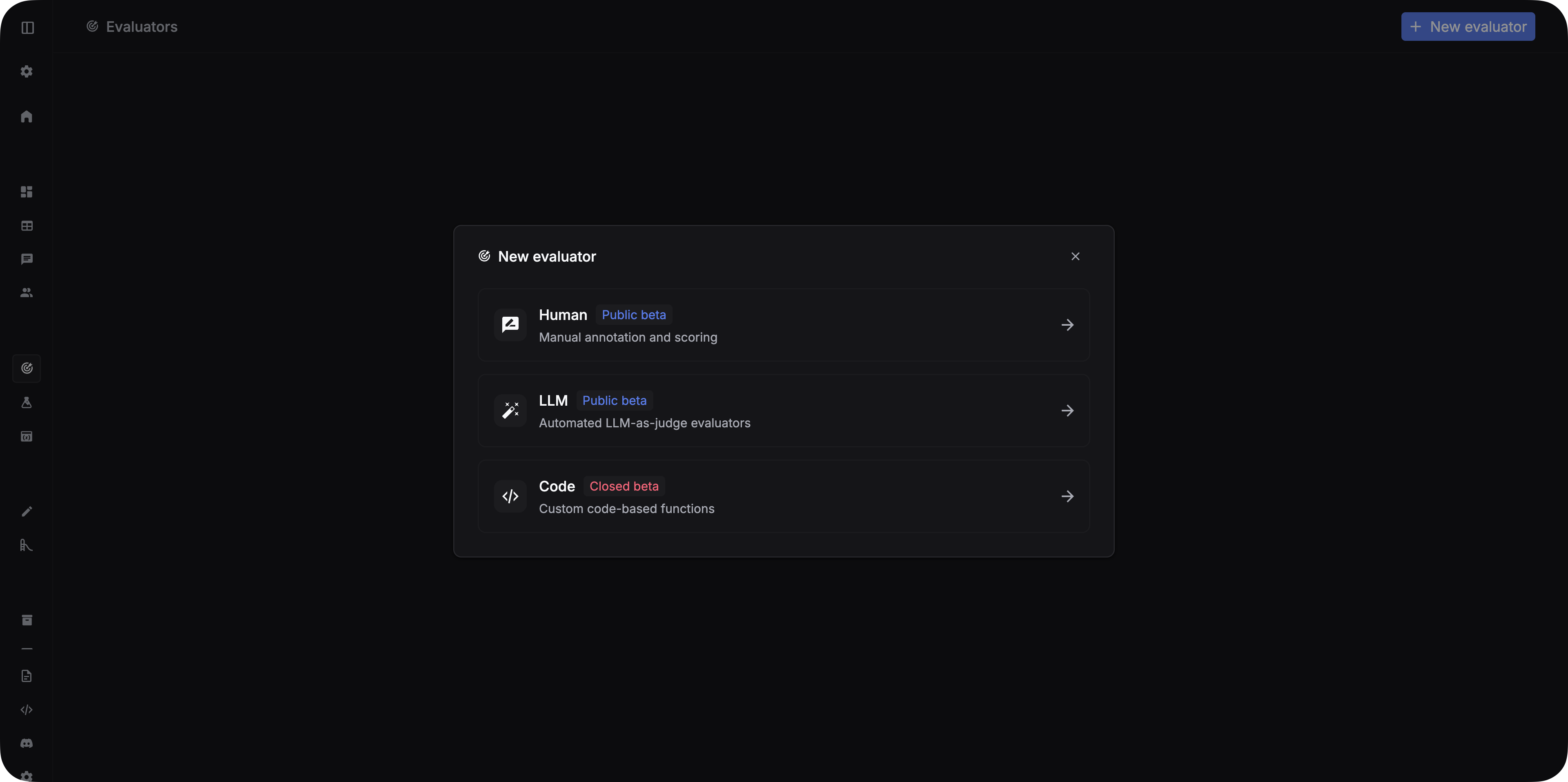

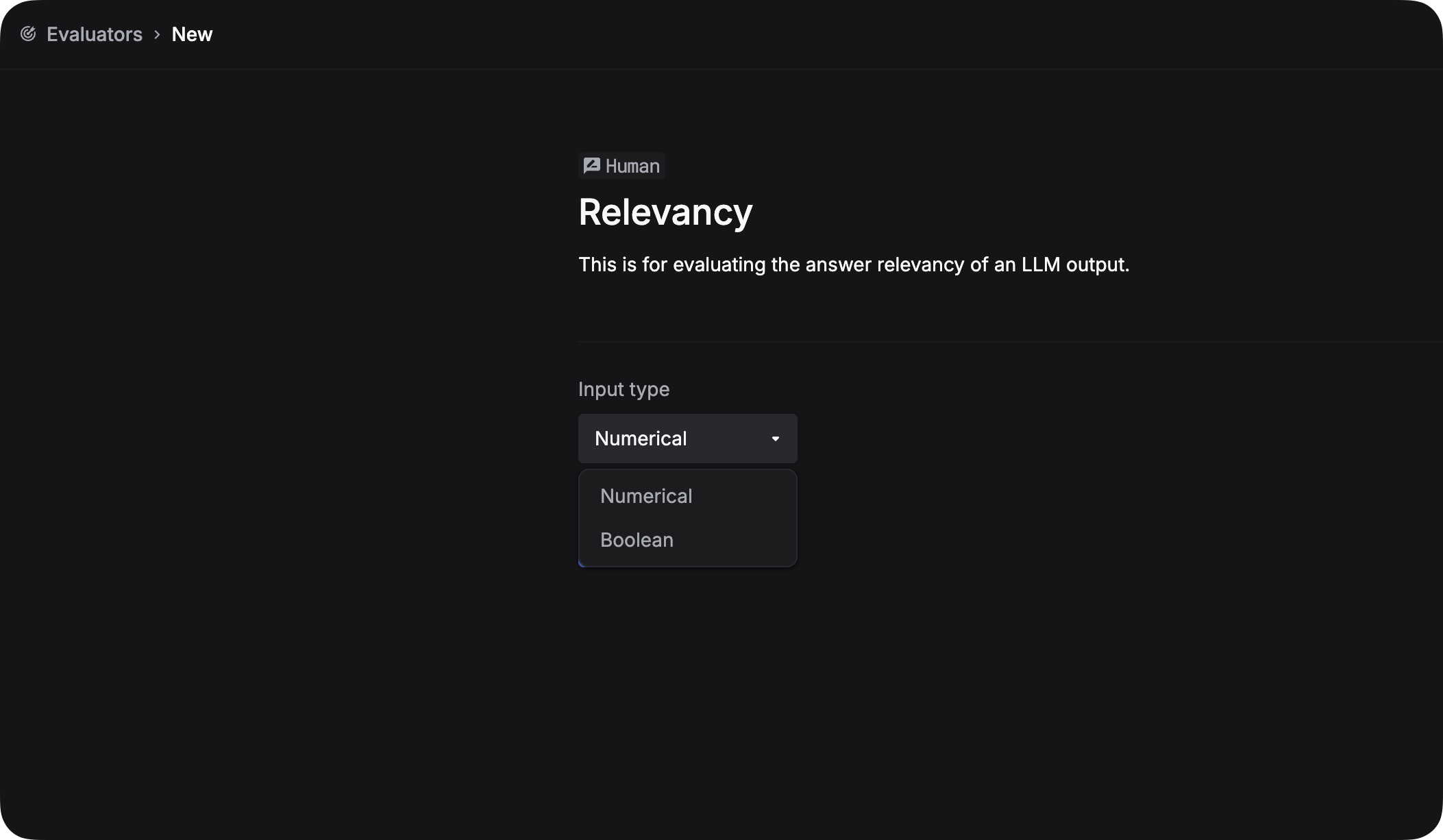

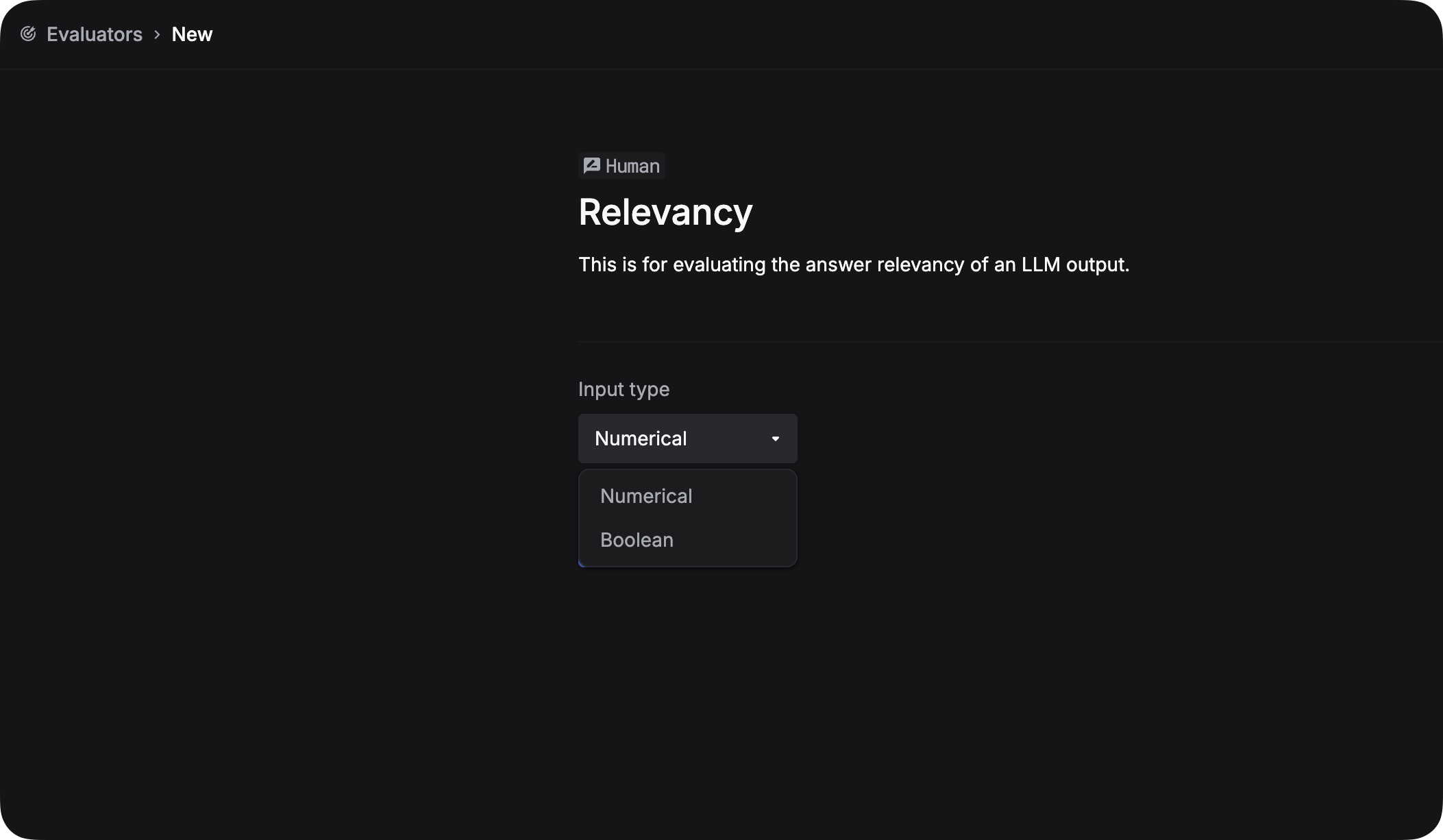

Create a new evaluator

In Evaluators, click the + New evaluator button, and select Human.

2. Configure the evaluator

Name the evaluator and add a description. Then choose the type of evaluation metric. Boolean and Numerical metrics are currently supported.
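To make the two metric types concrete, here is a minimal Python sketch of how a human evaluator's configuration could be modeled and how a reviewer's score could be checked against it. This is an illustration only, not the platform's API: the class `HumanEvaluatorConfig`, its fields, and the 0 to 5 default range for Numerical metrics are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Literal

# Hypothetical names: the two metric types mirror the ones listed above.
MetricType = Literal["boolean", "numerical"]


@dataclass(frozen=True)
class HumanEvaluatorConfig:
    """Illustrative model of the fields entered in the configuration step."""
    name: str
    description: str
    metric_type: MetricType
    # Assumed range for numerical scores; the real range may differ.
    min_score: float = 0.0
    max_score: float = 5.0

    def accepts(self, score: object) -> bool:
        """Return True if `score` is a valid value for this metric type."""
        if self.metric_type == "boolean":
            return isinstance(score, bool)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(score, bool) or not isinstance(score, (int, float)):
            return False
        return self.min_score <= score <= self.max_score


helpfulness = HumanEvaluatorConfig(
    name="Helpfulness",
    description="Did the response answer the user's question?",
    metric_type="boolean",
)
tone = HumanEvaluatorConfig(
    name="Tone",
    description="How appropriate is the tone of the response?",
    metric_type="numerical",
)
```

With these definitions, `helpfulness.accepts(True)` holds while `helpfulness.accepts(1)` does not, and `tone.accepts(4.5)` holds while `tone.accepts(7)` falls outside the assumed range.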

3

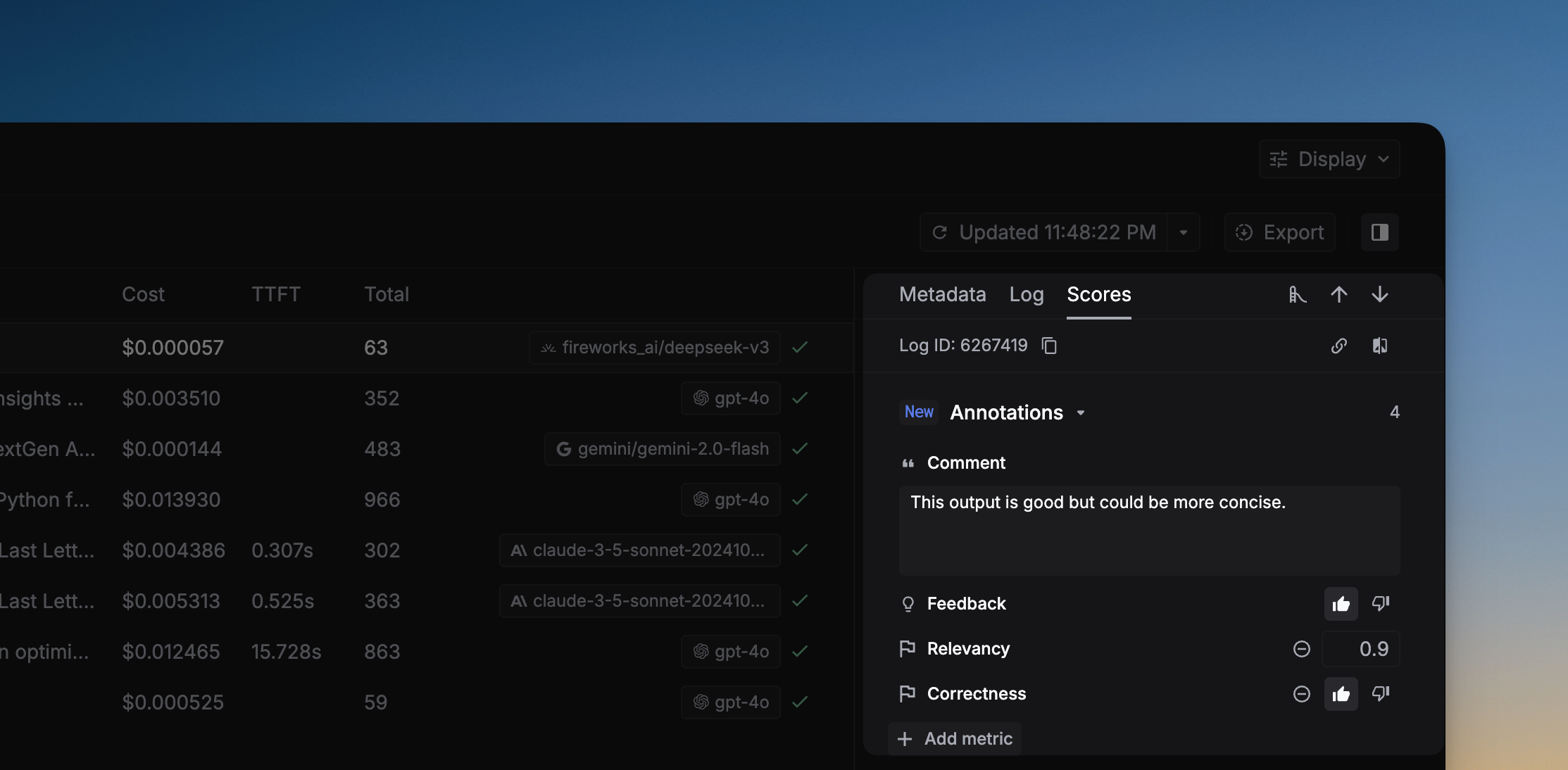

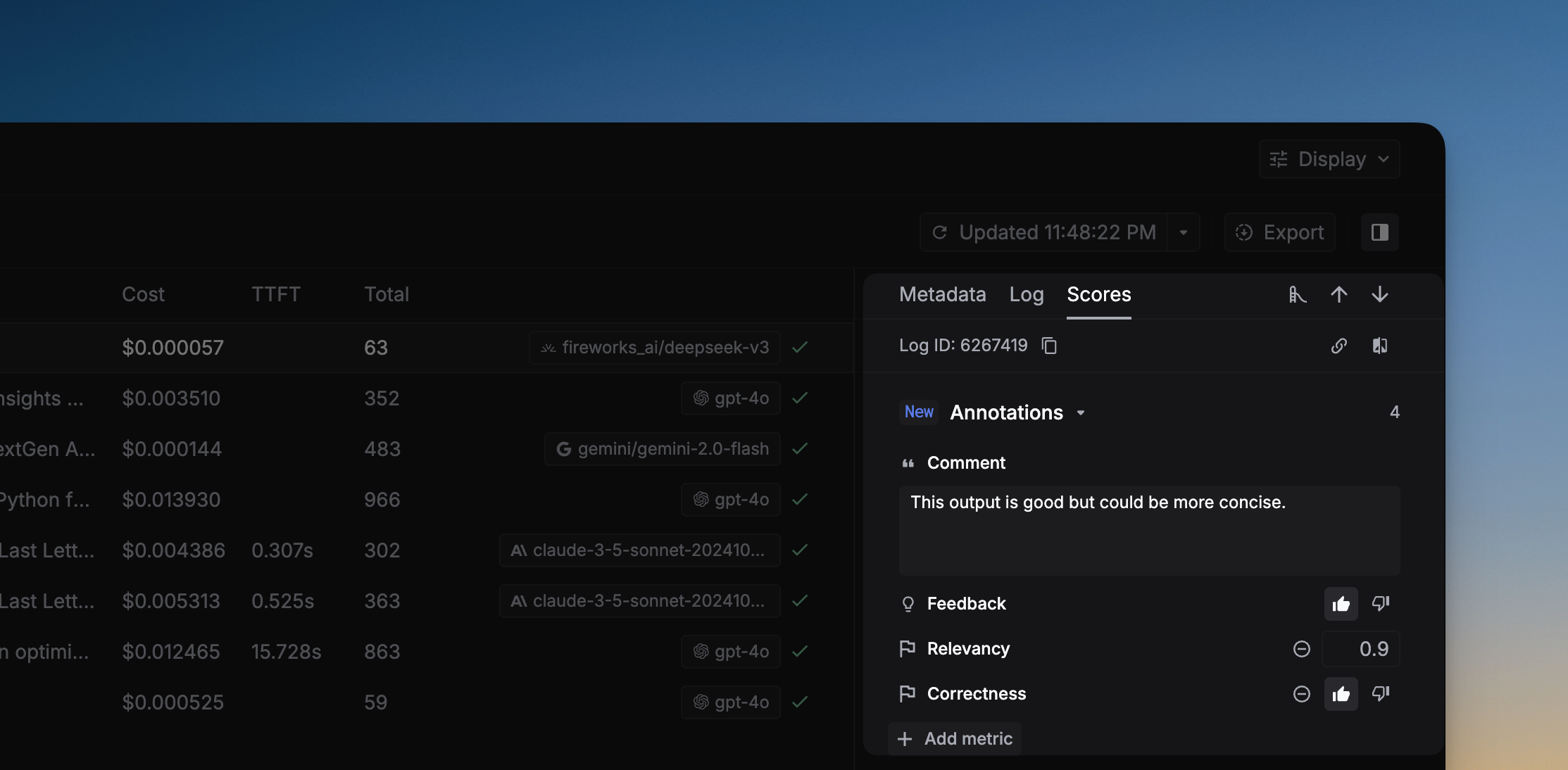

Annotate LLM logs in Logs

You can annotate LLM logs in the Scores side panel of Logs.
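Once logs carry human annotations, one way to check the accuracy of an AI evaluator is to compare its scores with the human ones on the same logs. The sketch below assumes you have already collected both sets of Boolean scores into plain dictionaries keyed by log ID. The function name `agreement_rate`, the log IDs, and the scores are all hypothetical, and no platform API is involved.

```python
def agreement_rate(human: dict[str, bool], ai: dict[str, bool]) -> float:
    """Fraction of jointly scored logs where human and AI scores match."""
    shared = human.keys() & ai.keys()  # only logs scored by both
    if not shared:
        raise ValueError("no logs were scored by both evaluators")
    matches = sum(human[log_id] == ai[log_id] for log_id in shared)
    return matches / len(shared)


# Made-up example data: log_4 has no human annotation, so it is ignored.
human_scores = {"log_1": True, "log_2": False, "log_3": True}
ai_scores = {"log_1": True, "log_2": True, "log_3": True, "log_4": False}

rate = agreement_rate(human_scores, ai_scores)
print(f"Human/AI agreement: {rate:.0%}")
```

A low agreement rate suggests that the AI evaluator's prompt or criteria need revisiting before you rely on it without human review.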