What is Keywords AI?

Keywords AI is a full-stack LLM engineering platform that helps developers and PMs build reliable AI products 10x faster. In a shared workspace, product teams can monitor, optimize, and improve AI performance.

Features

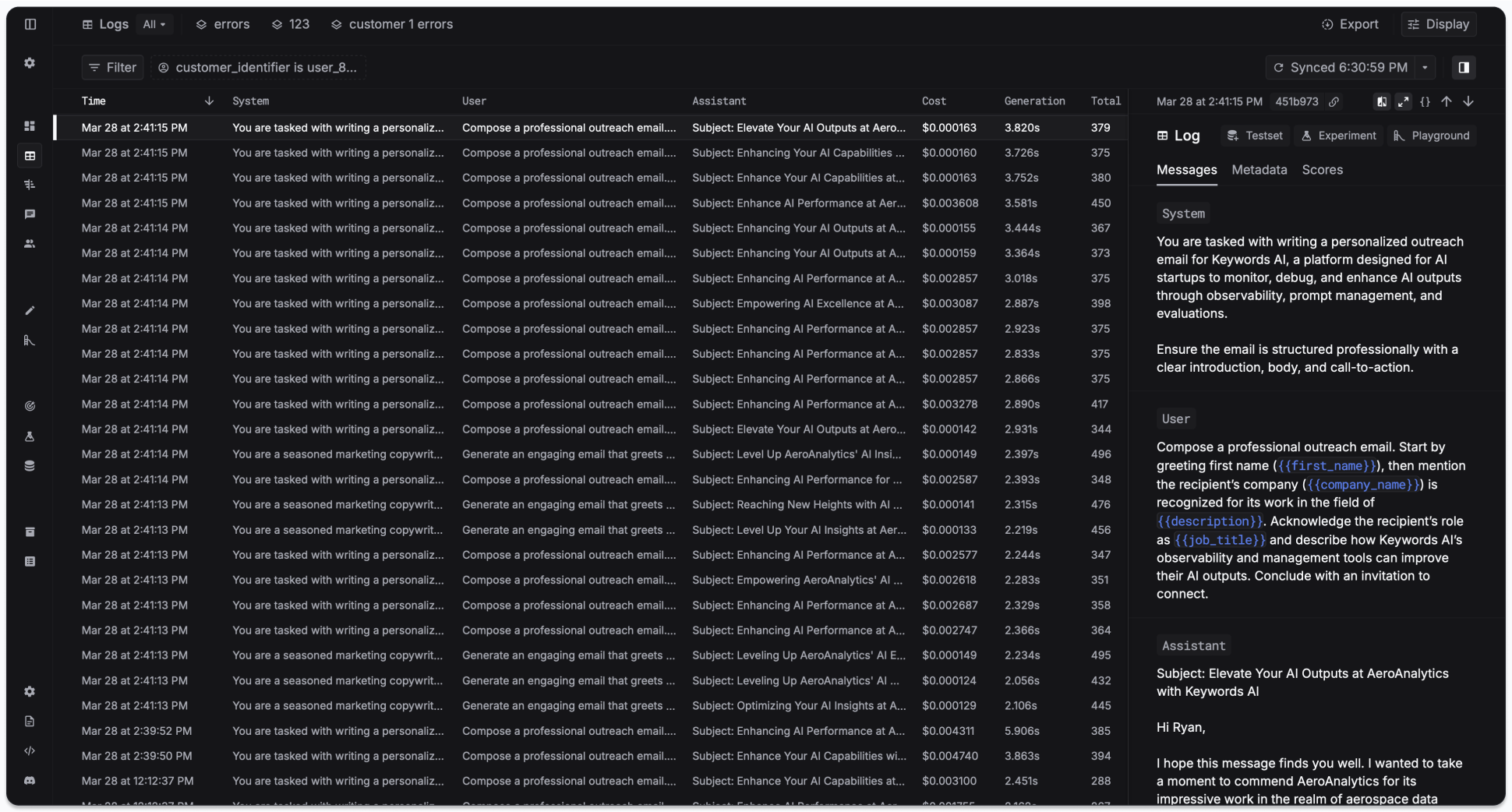

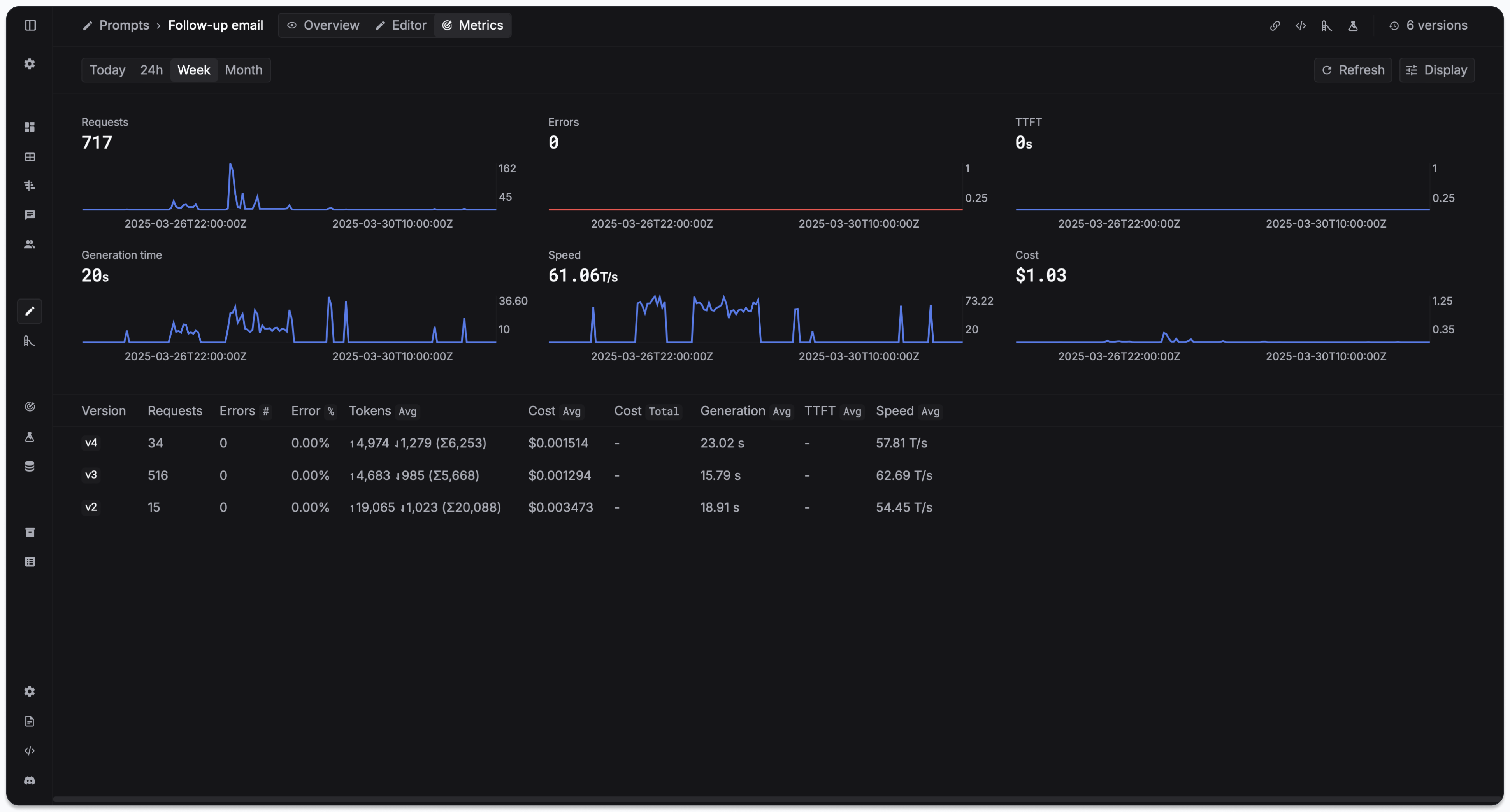

Observability

Monitor your LLM applications with comprehensive visibility into performance, costs, and user interactions.

- Monitoring dashboard

- LLM logging

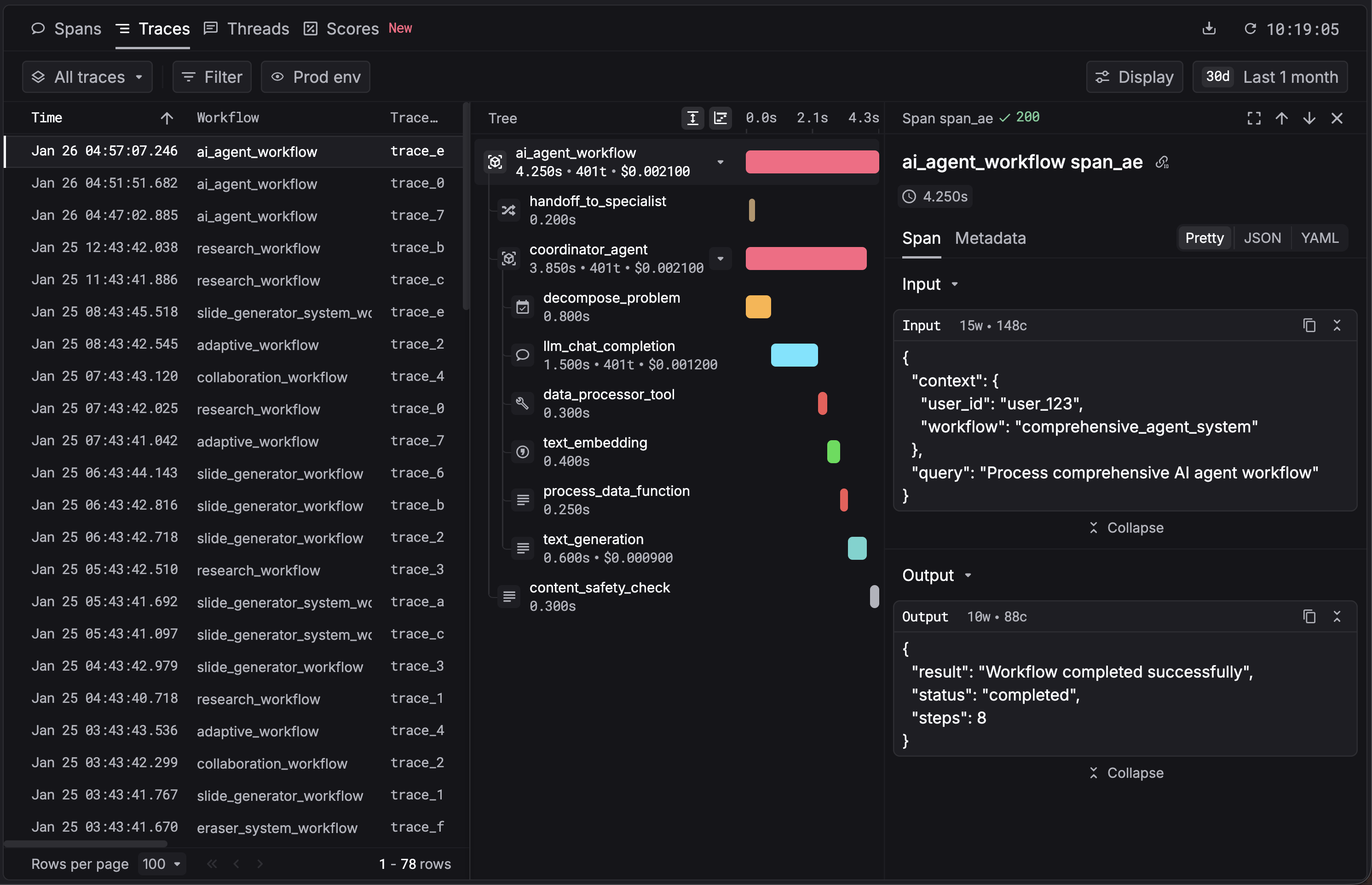

- Agent tracing

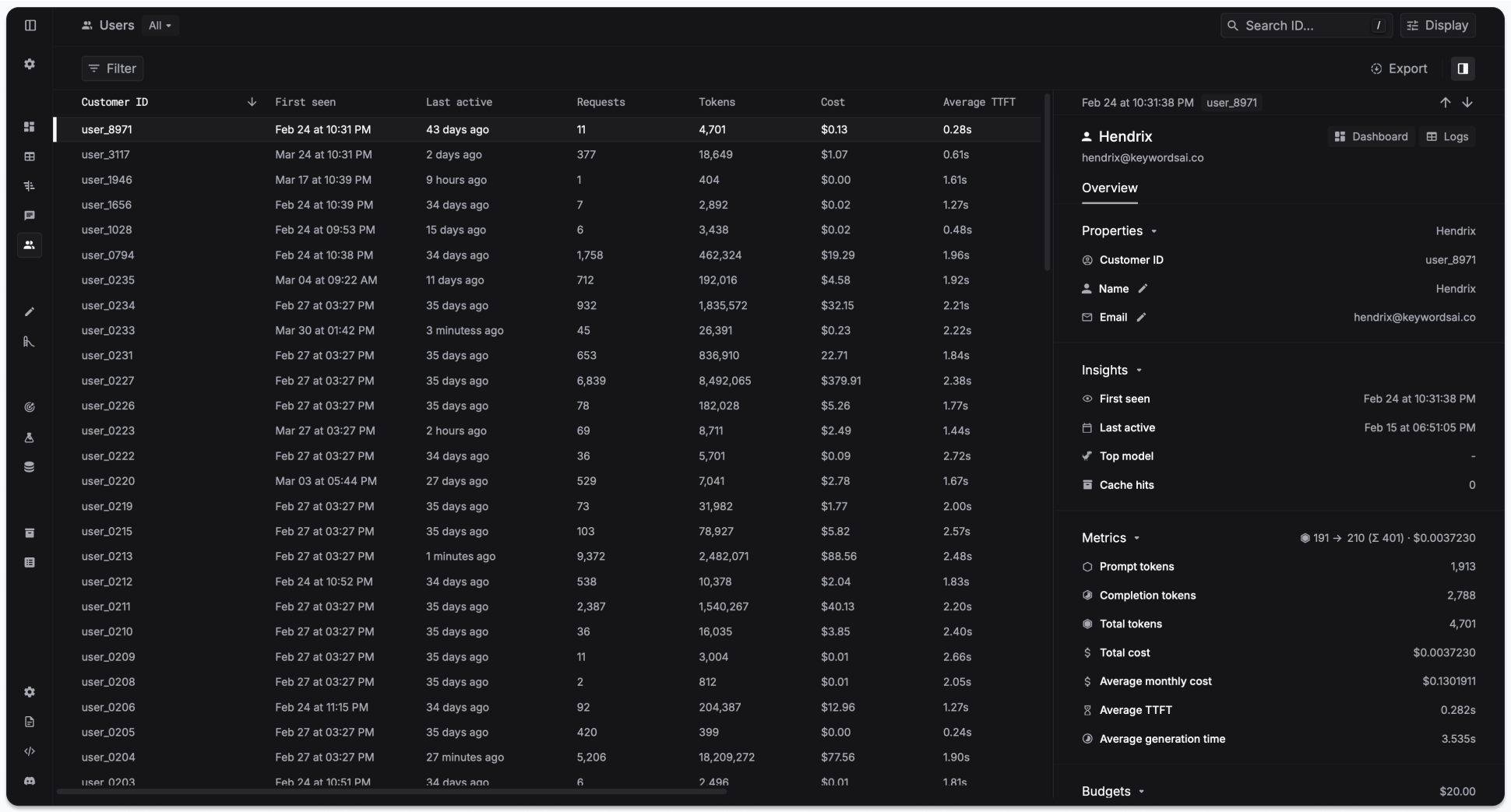

- User analytics

Logging quickstart

Send your existing LLM calls to Keywords AI for observability
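Conceptually, logging an existing LLM call means capturing its input, output, and metadata such as latency and token counts every time the call runs. The following is only a plain-Python sketch of that idea under stated assumptions: `LLMLogRecord`, `InMemoryLogger`, and `logged_call` are hypothetical names, the list-backed logger stands in for a hosted log sink, and whitespace word counts stand in for real token counts. It does not use the Keywords AI SDK or API.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LLMLogRecord:
    """One logged LLM call. Field names are illustrative, not a Keywords AI schema."""
    model: str
    prompt: str
    completion: str
    latency_s: float
    prompt_tokens: int
    completion_tokens: int


@dataclass
class InMemoryLogger:
    """Hypothetical stand-in for a hosted log sink: it only appends records to a list."""
    records: List[LLMLogRecord] = field(default_factory=list)

    def log(self, record: LLMLogRecord) -> None:
        self.records.append(record)


def logged_call(logger: InMemoryLogger, model: str, prompt: str,
                llm: Callable[[str], str]) -> str:
    """Wrap an existing LLM call so its input, output, and latency are recorded."""
    start = time.perf_counter()
    completion = llm(prompt)
    latency = time.perf_counter() - start
    # Whitespace word counts are a crude placeholder for a real tokenizer.
    logger.log(LLMLogRecord(
        model=model,
        prompt=prompt,
        completion=completion,
        latency_s=latency,
        prompt_tokens=len(prompt.split()),
        completion_tokens=len(completion.split()),
    ))
    return completion


# Usage with a fake "model" that upper-cases its input (no network call).
logger = InMemoryLogger()
reply = logged_call(logger, "demo-model", "hello world", lambda p: p.upper())
```

The wrapper leaves the original call untouched and only observes it, which mirrors the idea of sending existing calls somewhere for observability without changing application logic.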

Tracing quickstart

Monitor complex agent workflows step-by-step
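Agent tracing typically records each step of a workflow as a span nested under its parent step, so a run can be inspected step by step. As a rough illustration only, the hypothetical `Tracer` here (not the Keywords AI tracing SDK) uses a context manager that makes the innermost open span the parent of each new span; the span names in the demo are made up.

```python
import itertools
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass
class Span:
    span_id: int
    parent_id: Optional[int]
    name: str
    start: float
    end: Optional[float] = None


class Tracer:
    """Minimal in-process tracer; a hypothetical illustration, not a vendor SDK."""

    def __init__(self) -> None:
        self.spans: List[Span] = []
        self._stack: List[Span] = []
        self._ids = itertools.count(1)

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        # The innermost open span (if any) becomes this span's parent.
        parent = self._stack[-1].span_id if self._stack else None
        s = Span(next(self._ids), parent, name, time.perf_counter())
        self.spans.append(s)
        self._stack.append(s)
        try:
            yield s
        finally:
            # Close the span even if the wrapped step raises.
            s.end = time.perf_counter()
            self._stack.pop()


# Usage: one agent run with two child steps.
tracer = Tracer()
with tracer.span("agent_run"):
    with tracer.span("plan"):
        pass
    with tracer.span("tool_call:search"):
        pass
```

Both child spans end up with the `agent_run` span as their parent, which is the tree structure a trace view would render.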

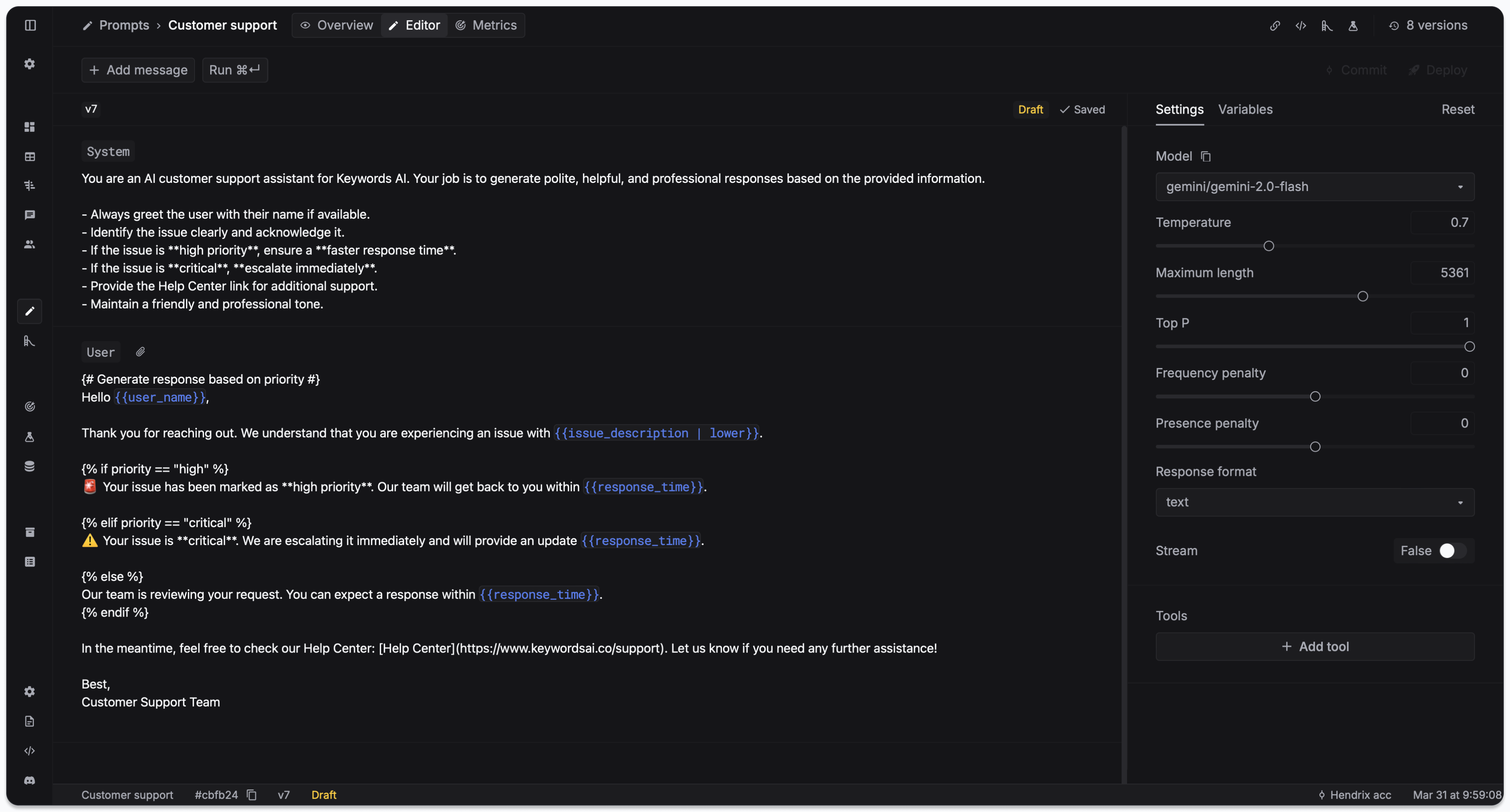

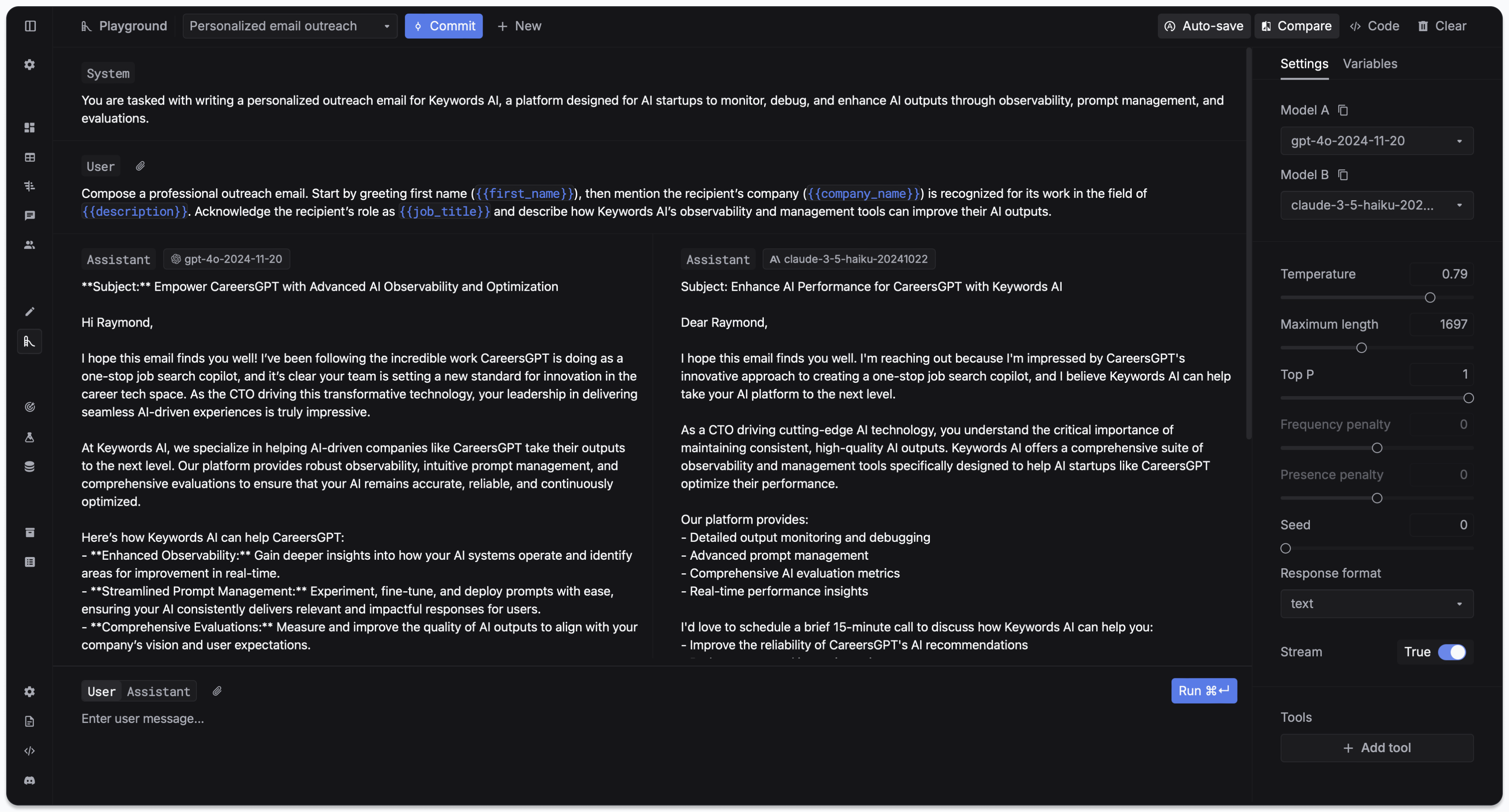

Prompt management

Isolate prompts from code, collaborate with your team, and iterate faster with version control.

- Prompt playground

- Prompt editor

- Metrics monitoring

- Version control
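Isolating prompts from code usually means the application fetches a named prompt, optionally pinned to a version, at runtime instead of hard-coding its text. A minimal sketch, assuming a hypothetical in-memory `PromptRegistry` with append-only versions and `string.Template` placeholders; it is not the Keywords AI prompt API, and the prompt ID and texts are invented for illustration.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store with append-only versions."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, prompt_id: str, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        history = self.versions.setdefault(prompt_id, [])
        history.append(template)
        return len(history)

    def render(self, prompt_id: str, variables: Dict[str, str],
               version: Optional[int] = None) -> str:
        """Render a pinned version, or the latest one when version is None."""
        history = self.versions[prompt_id]
        if version is None:
            template = history[-1]
        elif 1 <= version <= len(history):
            template = history[version - 1]
        else:
            raise ValueError(f"{prompt_id!r} has no version {version}")
        # substitute() raises KeyError if a placeholder has no value.
        return Template(template).substitute(variables)


registry = PromptRegistry()
registry.publish("support_reply", "Answer the question: $question")
registry.publish("support_reply", "You are a concise support agent. Answer: $question")
question = {"question": "How do I reset my password?"}
latest = registry.render("support_reply", question)
pinned = registry.render("support_reply", question, version=1)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice in this sketch: a variable missing from code fails loudly instead of leaking a raw `$placeholder` into the model input.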

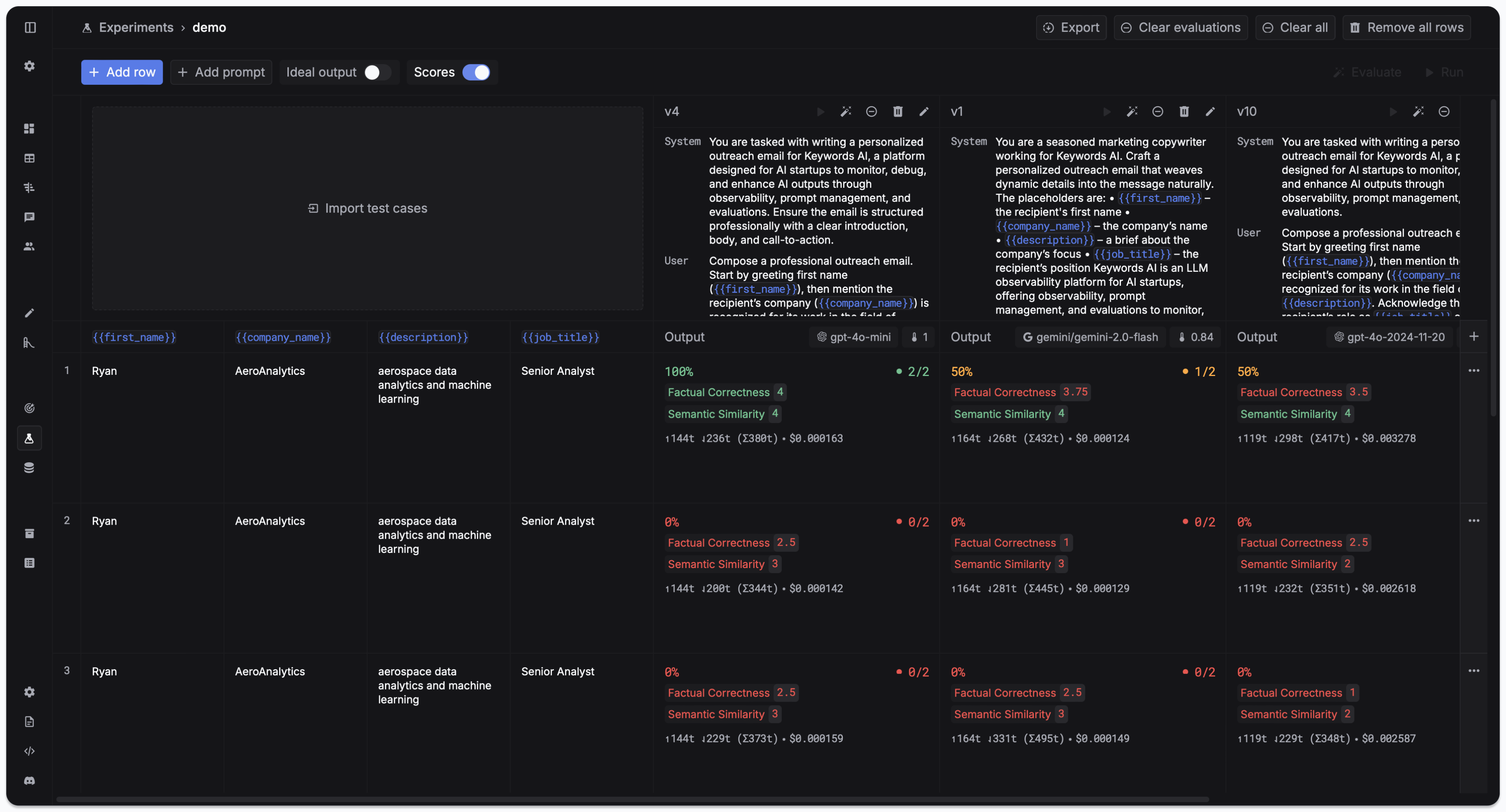

Evaluations

Test your prompts systematically with evaluators, datasets, and experiments.

- Experiments

- Evaluators

- Datasets & scores
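In general terms, an experiment runs each prompt variant over a dataset and scores every output with an evaluator, then aggregates the scores per variant. The sketch below assumes a toy exact-match evaluator and uses fixed functions as stand-ins for model calls; `exact_match`, `run_experiment`, and the dataset rows are hypothetical and do not reflect Keywords AI's evaluator or dataset formats.

```python
from typing import Callable, Dict, List

Example = Dict[str, str]  # hypothetical row shape: {"input": ..., "expected": ...}


def exact_match(output: str, expected: str) -> float:
    """Toy evaluator: 1.0 if the normalized strings match, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_experiment(variants: Dict[str, Callable[[str], str]],
                   dataset: List[Example],
                   evaluator: Callable[[str, str], float]) -> Dict[str, float]:
    """Score each prompt variant on every dataset row and return its mean score."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    results: Dict[str, float] = {}
    for name, generate in variants.items():
        scores = [evaluator(generate(row["input"]), row["expected"]) for row in dataset]
        results[name] = sum(scores) / len(scores)
    return results


dataset = [
    {"input": "2+2", "expected": "4"},
    {"input": "3+3", "expected": "6"},
]
answers = {"2+2": "4", "3+3": "6"}
# Stand-ins for two prompt versions; a real experiment would call an LLM here.
variants = {
    "v1": lambda q: "4",         # always answers "4"
    "v2": lambda q: answers[q],  # answers every row correctly
}
scores = run_experiment(variants, dataset, exact_match)
```

Here `v1` scores 0.5 and `v2` scores 1.0, so comparing mean evaluator scores across variants on the same dataset is what lets one version be chosen over another.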

AI gateway

Interface with 250+ LLMs via one unified API with built-in optimization features.

Why Keywords AI?

Keywords AI is the only platform that combines AI Gateway, Observability, Prompt Management, and Evaluations in one unified solution. Unlike other tools that focus on single aspects of LLM development, we provide everything you need to build, monitor, and improve AI applications.

Unified platform

No need to integrate multiple tools: all core features live in one solution

24/7 support

Dedicated support team available around the clock

Simple setup

Get started in minutes with our managed platform and straightforward integration process

Enterprise ready

SOC 2, GDPR, and HIPAA compliant with enterprise-grade security and scalability built for production environments

Platform comparison

| Feature | Keywords AI | Langfuse | Helicone | Braintrust |
|---|---|---|---|---|
| AI gateway | ✅ | ❌ | ✅ | Limited |
| Observability | ✅ | ✅ | ✅ | ✅ |
| Prompt management | ✅ | ✅ | Limited | ✅ |
| Evaluations | ✅ | ✅ | ❌ | ✅ |
| Team collaboration | ✅ | ✅ | ✅ | ✅ |
| Setup complexity | Simple | Hard | Simple | Medium |
| Hosting | Managed | Open Source | Managed | Enterprise |
| Support service | 24/7 dedicated | GitHub (24-48h) | Contact form | Support center |