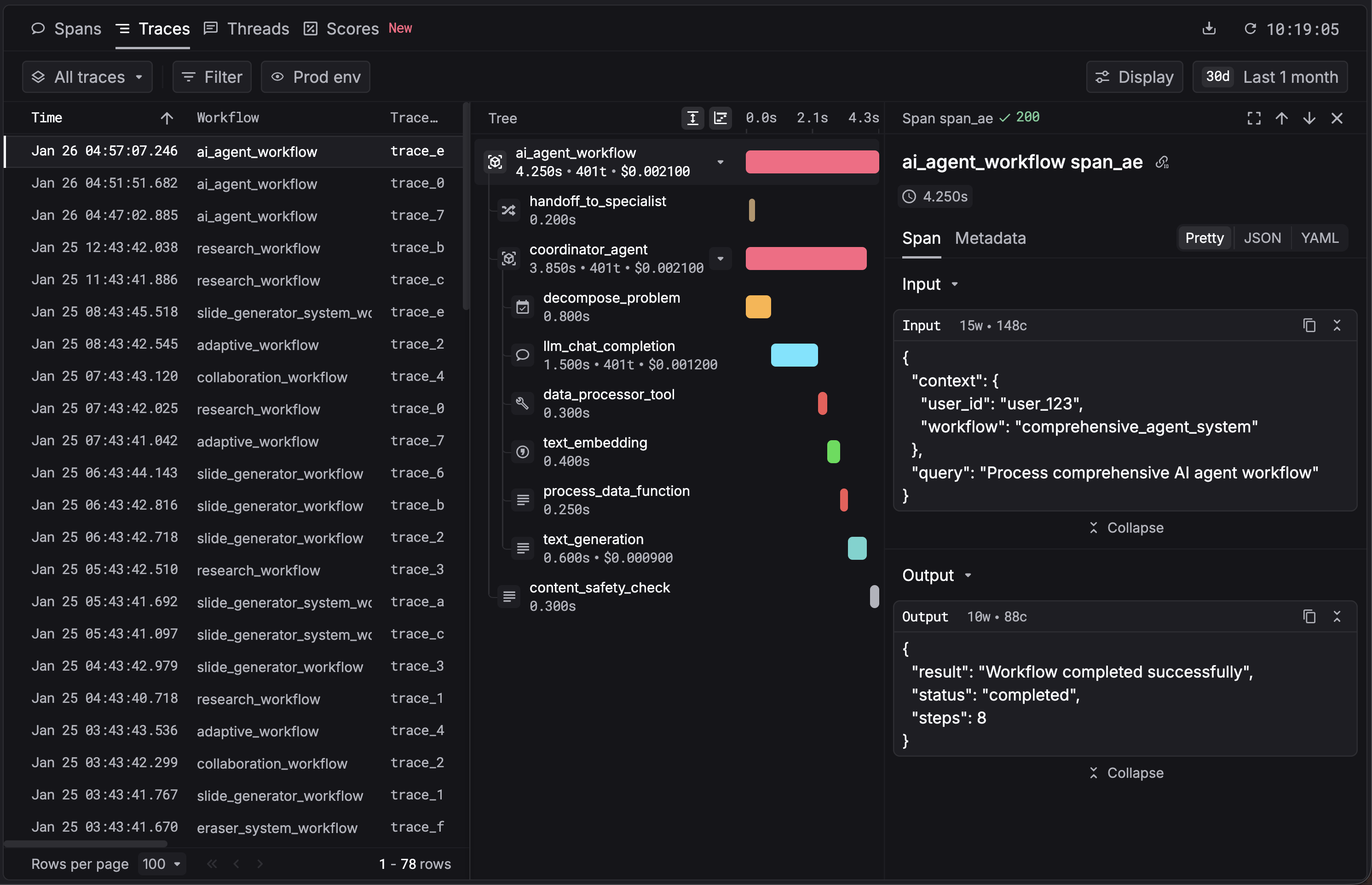

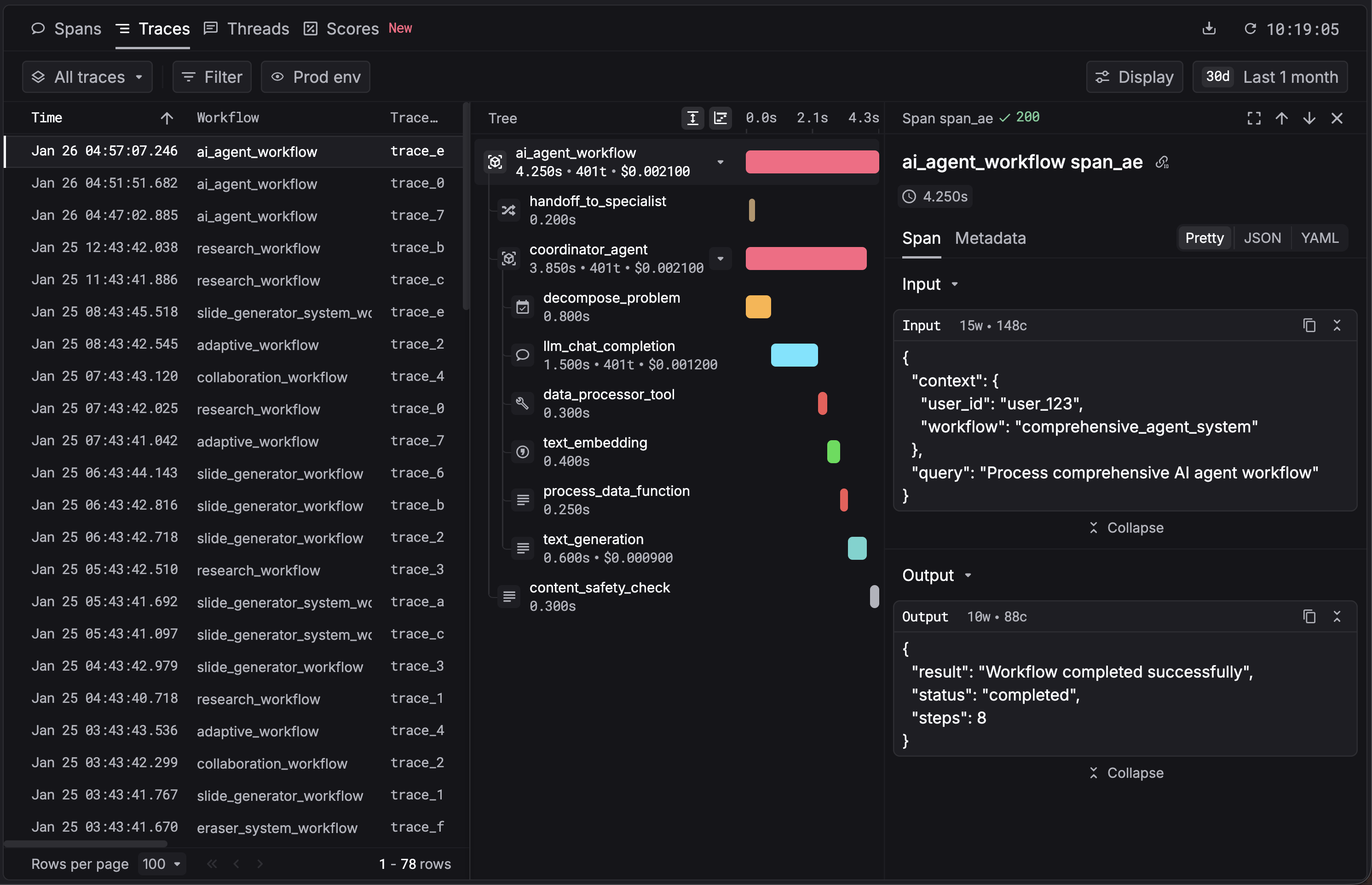

What are traces?

A trace is a chained collection of workflows and tasks. Tree and waterfall views help you track the dependencies between them and the latency of each step.
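To make the idea of a trace concrete, here is a minimal, library-free sketch of a workflow span that contains two task spans, printed as a tree with per-step latency. The `Span` class, the `span` helper, and the step names are hypothetical and exist only for illustration; they are not part of the Keywords AI SDK.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Span:
    """One timed unit of work: a workflow or a task (illustrative only)."""
    name: str
    kind: str  # "workflow" or "task"
    start: float = 0.0
    end: float = 0.0
    children: List["Span"] = field(default_factory=list)

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000.0


_stack: List[Span] = []  # spans that are currently open, innermost last


@contextmanager
def span(name: str, kind: str) -> Iterator[Span]:
    """Open a span and attach it to the enclosing span, if there is one."""
    current = Span(name, kind, start=time.perf_counter())
    if _stack:
        _stack[-1].children.append(current)
    _stack.append(current)
    try:
        yield current
    finally:
        current.end = time.perf_counter()
        _stack.pop()


def render_tree(node: Span, depth: int = 0) -> List[str]:
    """Return one indented line per span, parents before children."""
    lines = [f"{'  ' * depth}{node.kind}: {node.name} ({node.duration_ms:.1f} ms)"]
    for child in node.children:
        lines.extend(render_tree(child, depth + 1))
    return lines


with span("answer_question", "workflow") as root:
    with span("retrieve_context", "task"):
        time.sleep(0.01)  # stand-in for real work
    with span("generate_answer", "task"):
        time.sleep(0.02)  # stand-in for real work

print("\n".join(render_tree(root)))
```

The nesting is what a tree view displays, and the start and end times of each span are what a waterfall view lays out along a time axis.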

Use agent tracing

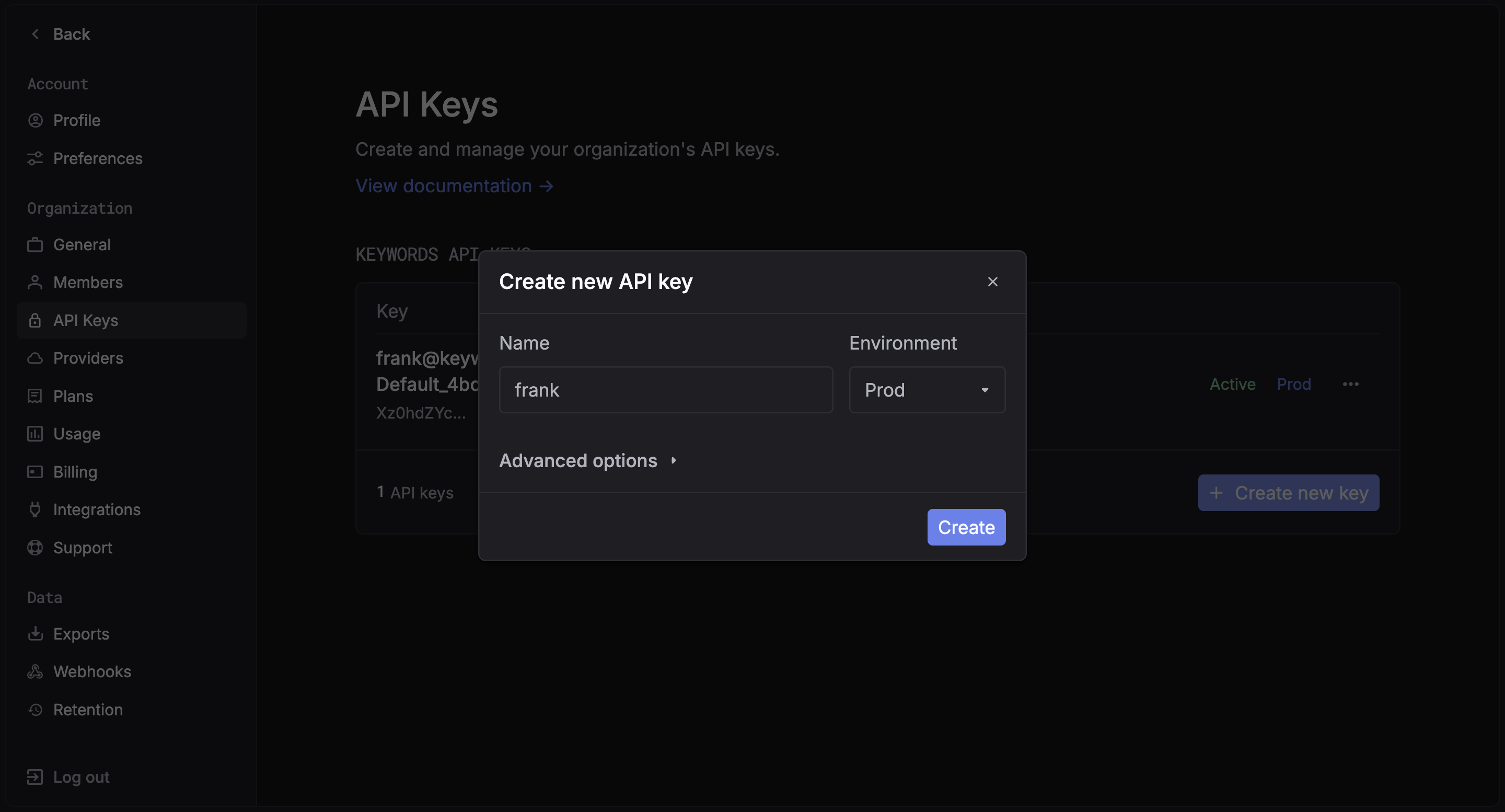

1. Get your Keywords AI API key

After you create an account on Keywords AI, you can get your API key from the API keys page.

2. Keywords AI Native (OpenTelemetry)

You just need to add the keywordsai_tracing package to your project and annotate your workflows.

- Python

- JS/TS
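For the Python option, annotating a workflow might look roughly like the sketch below. The import paths (`keywordsai_tracing.main`, `keywordsai_tracing.decorators`), the `KeywordsAITelemetry` class, and the `name=` parameter are assumptions about the package layout and should be checked against the version you install. The function names and the fallback decorators are hypothetical; the fallback only lets the example run when the package is absent.

```python
try:
    # Assumed import paths and names; verify against your installed package.
    from keywordsai_tracing.main import KeywordsAITelemetry
    from keywordsai_tracing.decorators import task, workflow

    telemetry = KeywordsAITelemetry()  # assumed to read its settings from the environment
except Exception:
    # Fallback: no-op decorators so this sketch still runs without the package.
    def _noop(name=None, **kwargs):
        def wrap(fn):
            return fn
        return wrap

    task = workflow = _noop


@task(name="clean_text")
def clean_text(text: str) -> str:
    """Collapse whitespace and lowercase the input."""
    return " ".join(text.split()).lower()


@task(name="first_sentence")
def first_sentence(text: str) -> str:
    """Return everything before the first period."""
    return text.split(".")[0]


@workflow(name="summarize_document")
def summarize_document(text: str) -> str:
    """A workflow that chains two tasks; each call would become one trace."""
    return first_sentence(clean_text(text))


result = summarize_document("  Traces   chain workflows. And tasks. ")
print(result)
```

Each decorated function keeps its normal return value; the decorators are only meant to record the call as a span, so tasks called inside a workflow appear nested under it.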

Set up Environment Variables

Get your API key from the API Keys page in Settings, then configure it in your environment:

.env
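The contents of the .env file are not reproduced here. As a rough sketch, assuming the key is stored in a variable named `KEYWORDSAI_API_KEY` (an assumption; use whatever name your setup expects), a startup check like the one below fails early with a clear message when the key is missing. The `load_api_key` helper is hypothetical.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, or raise if it is missing.

    KEYWORDSAI_API_KEY is an assumed variable name used for illustration.
    """
    source = os.environ if env is None else env
    key = source.get("KEYWORDSAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "KEYWORDSAI_API_KEY is not set; add it to your .env file or shell environment."
        )
    return key


# Demonstration with an explicit mapping instead of the real environment.
demo_env = {"KEYWORDSAI_API_KEY": "  YOUR_KEYWORDSAI_API_KEY  "}
print(load_api_key(demo_env))
```

In an application you would call `load_api_key()` with no arguments so that it reads from `os.environ`.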

Optional HTTP instrumentation

If your logs show messages about HTTP instrumentation for requests or urllib3, install the corresponding OpenTelemetry instrumentations to enable it and silence these messages. This is optional; tracing works without them. Add them only if your app uses requests or urllib3.

3. View your traces

You can now see your traces on the Traces page.