Prerequisites

- You have already created at least one prompt in Keywords AI. Learn how to create a prompt here.

- You have added variables to your prompt. Learn how to add variables to your prompt here.

Setup

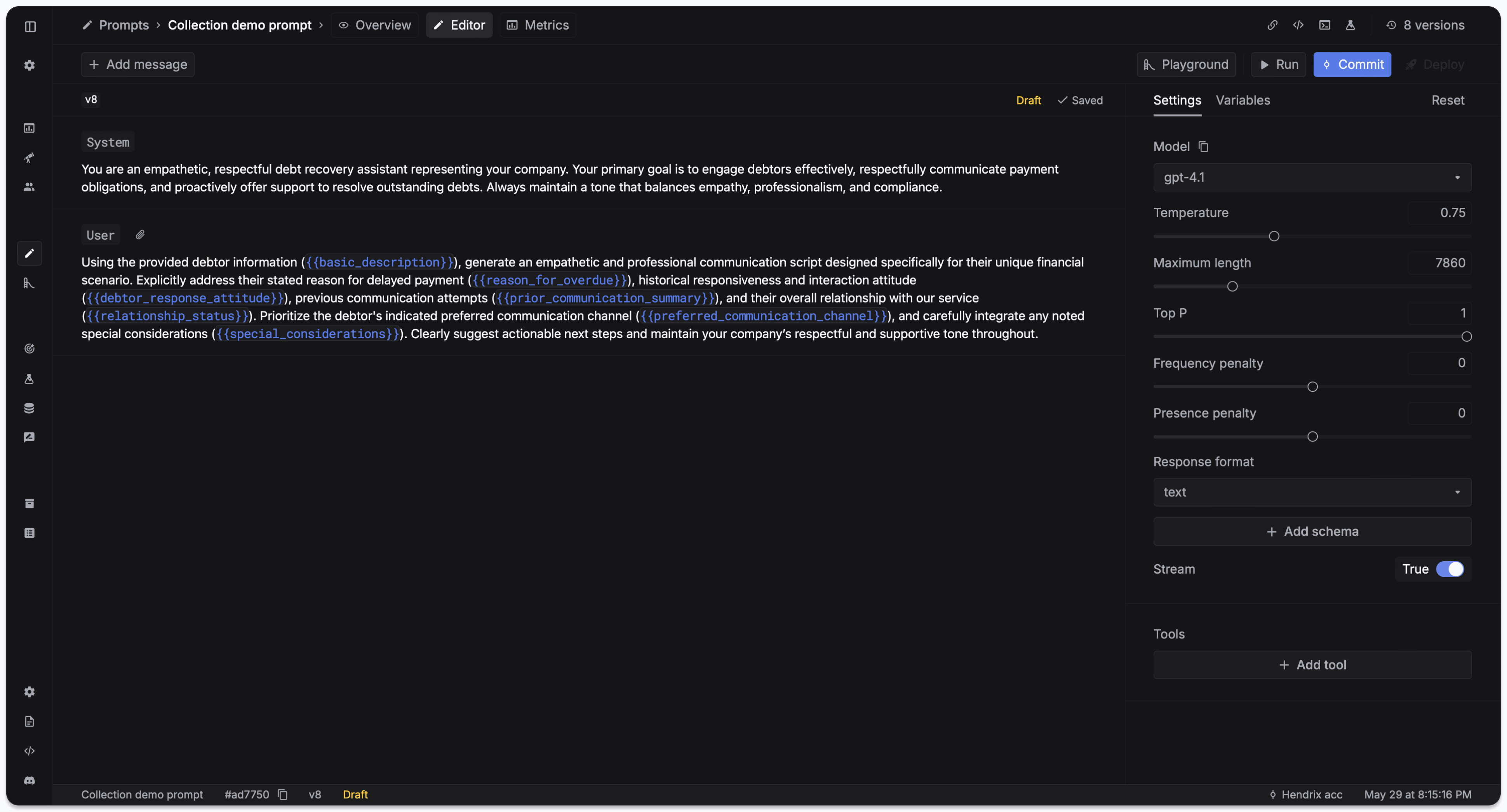

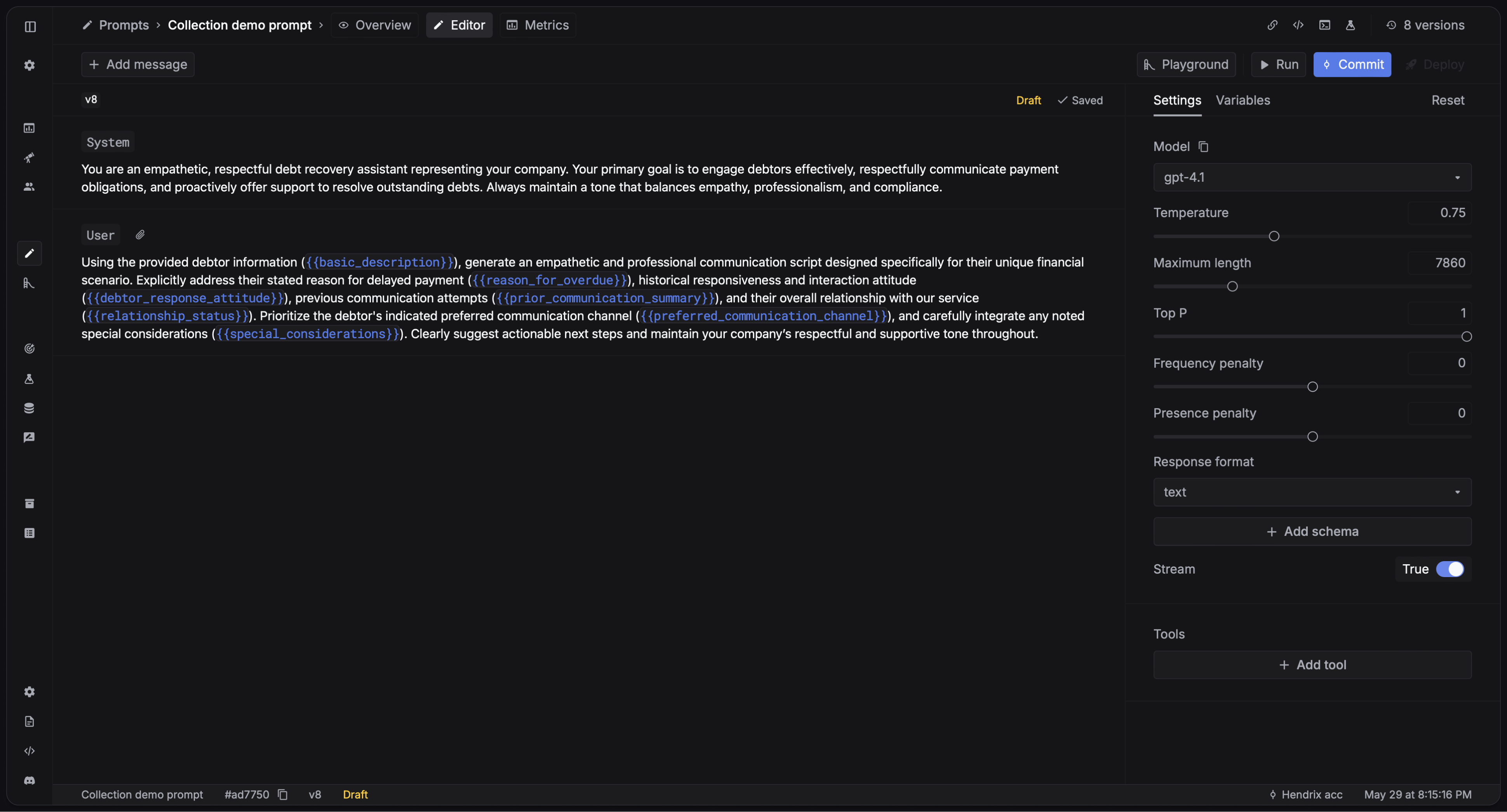

1. Create a prompt with variables

Use {{variable}} to define variables in your prompt. Configure the prompt in the side panel, then commit the prompt version.
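To make the role of variables concrete, here is a minimal sketch of how a {{variable}} placeholder could be filled in with values from a testcase. It only illustrates the idea and is not how Keywords AI renders prompts internally; the function name `render_prompt` and the example variables `language` and `text` are hypothetical.

```python
import re


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} placeholder with its value from `variables`.

    Illustrative only: the real substitution happens inside Keywords AI.
    """

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Fail loudly so a missing testcase column is easy to spot.
            raise KeyError(f"missing value for variable: {name}")
        return variables[name]

    # Match {{name}}, allowing optional spaces inside the braces.
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


# Hypothetical prompt template and testcase values.
template = "Translate the following text into {{language}}: {{text}}"
print(render_prompt(template, {"language": "French", "text": "Good morning"}))
# prints: Translate the following text into French: Good morning
```

Each variable name used in the prompt is what a testset column needs to match in the next step.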

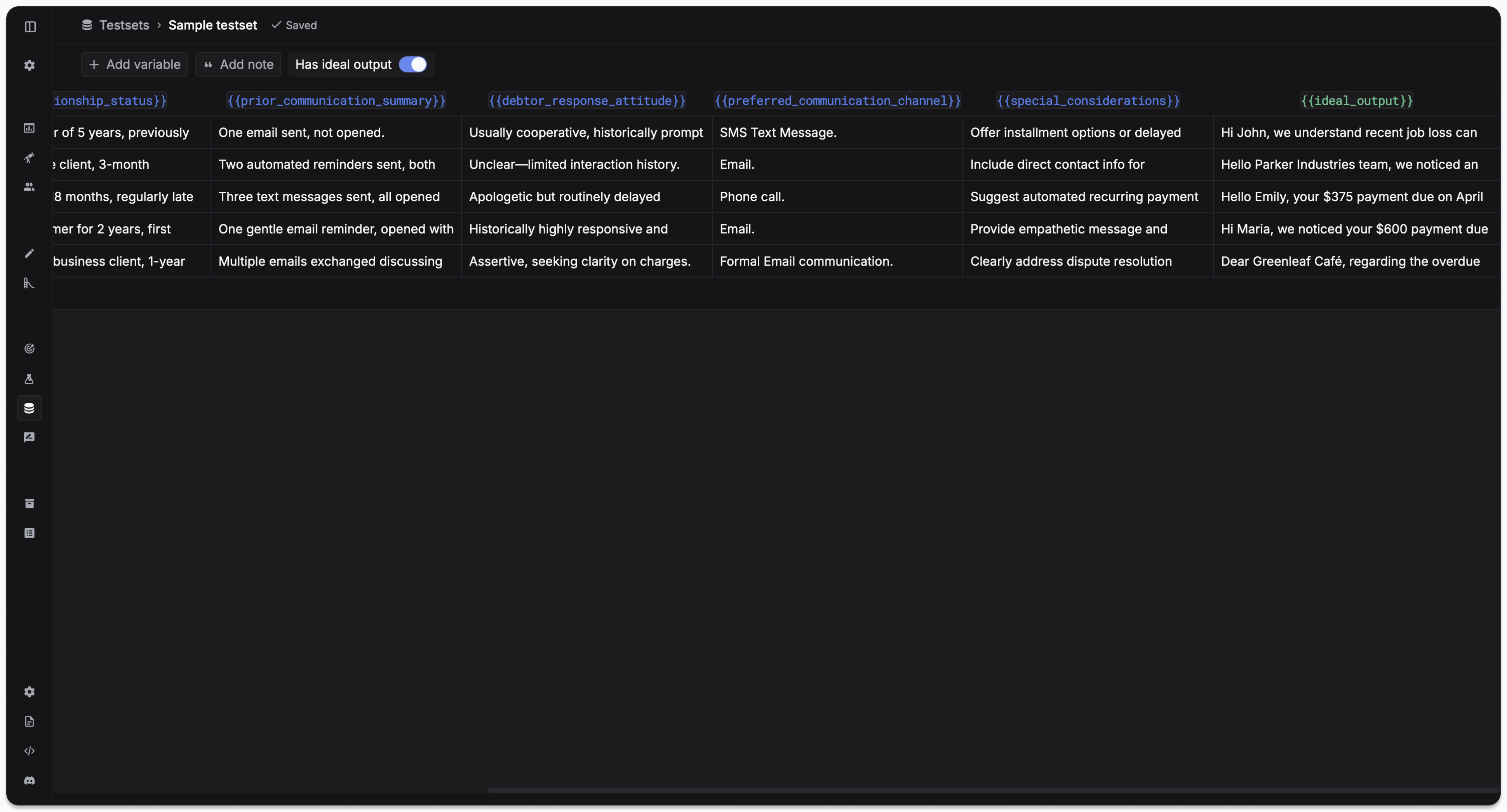

2. Create a testset

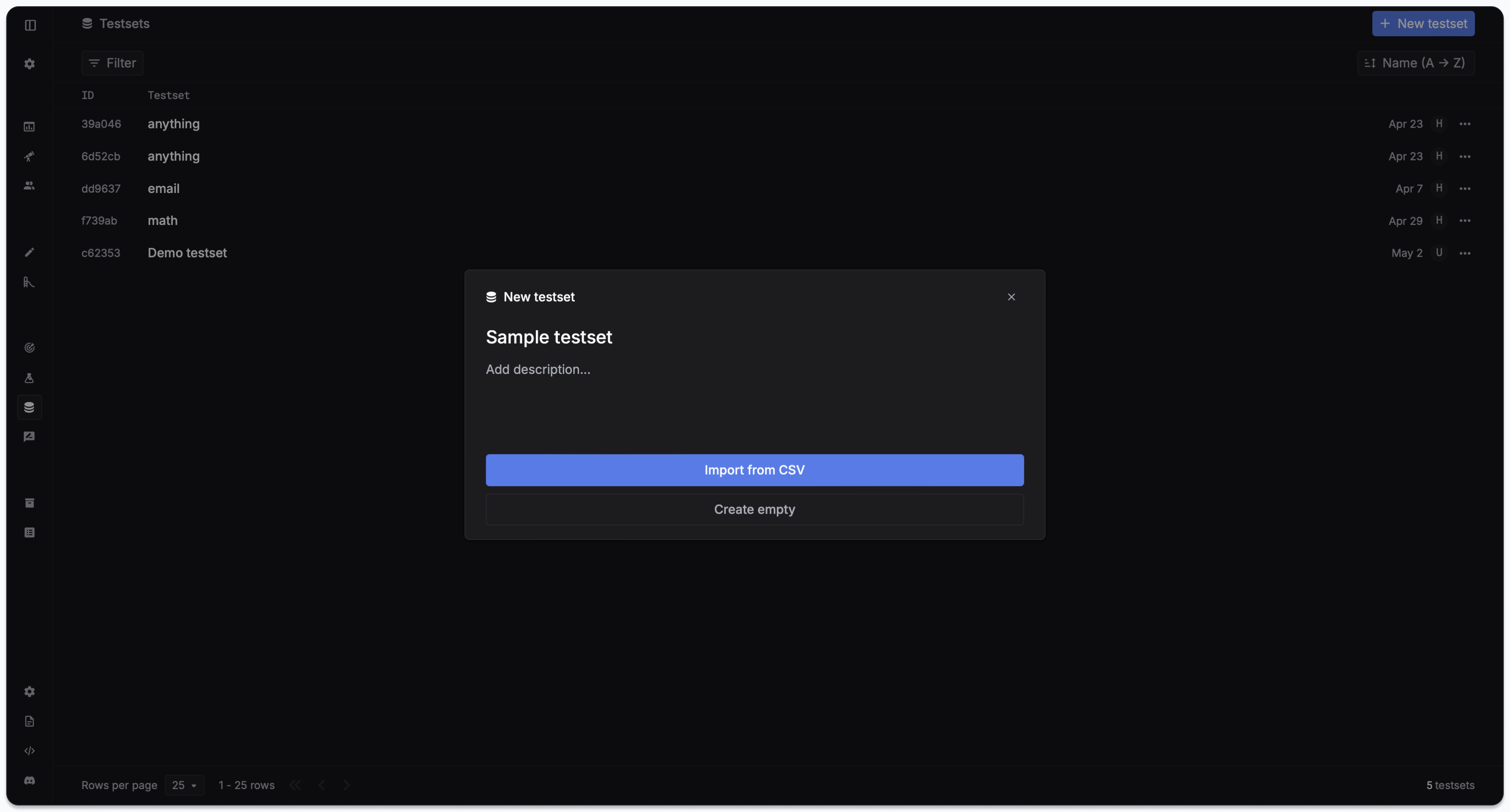

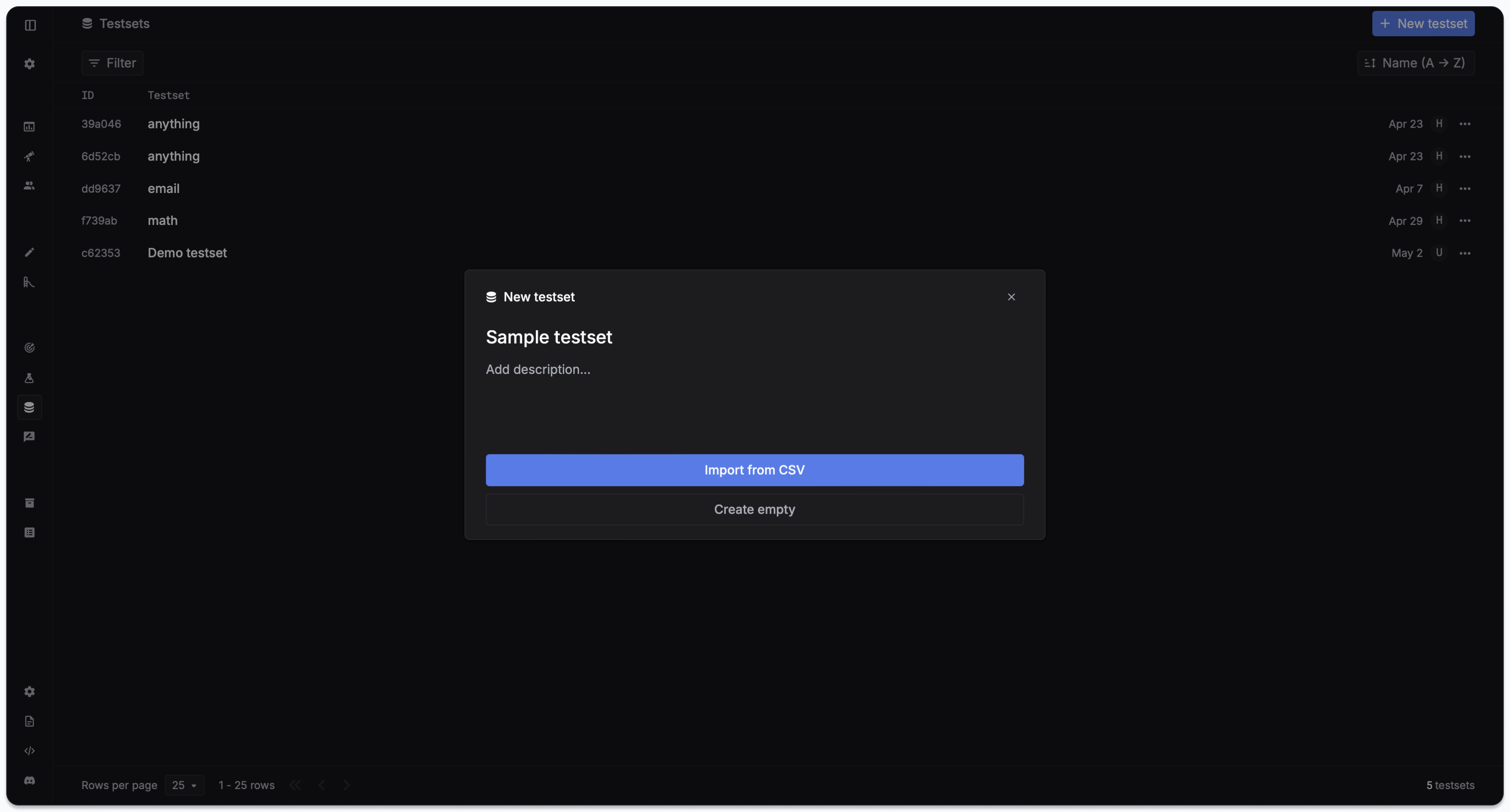

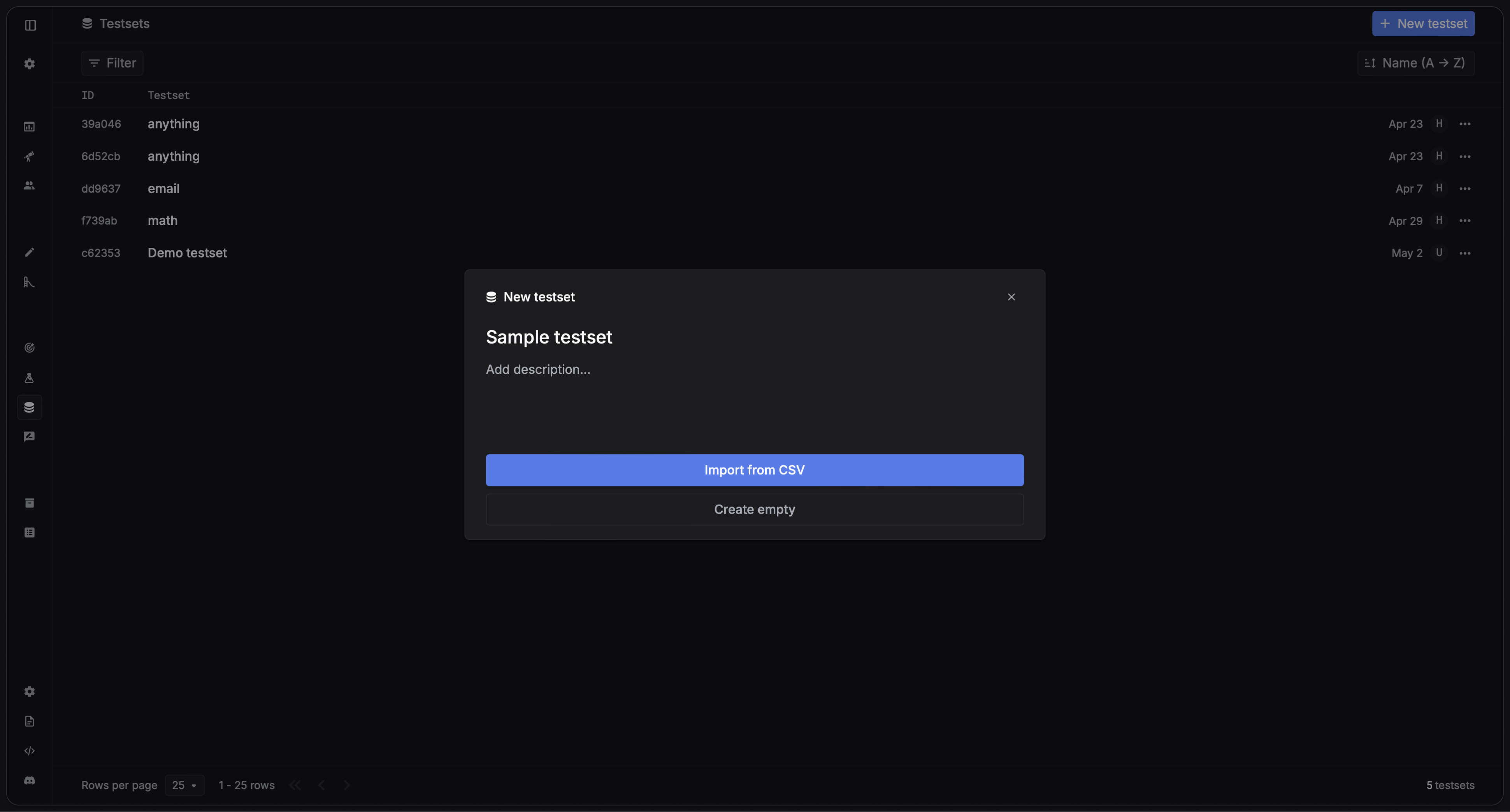

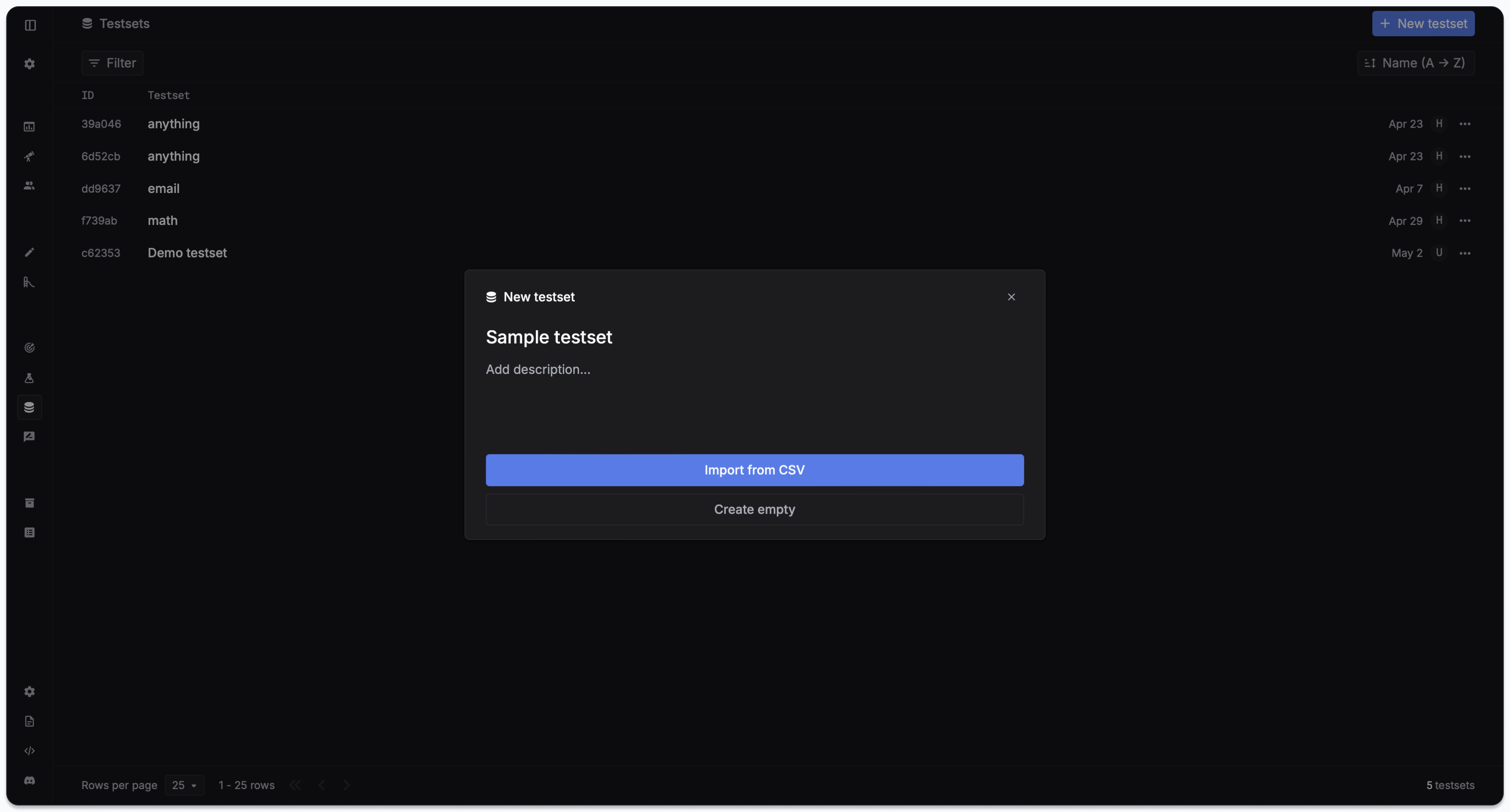

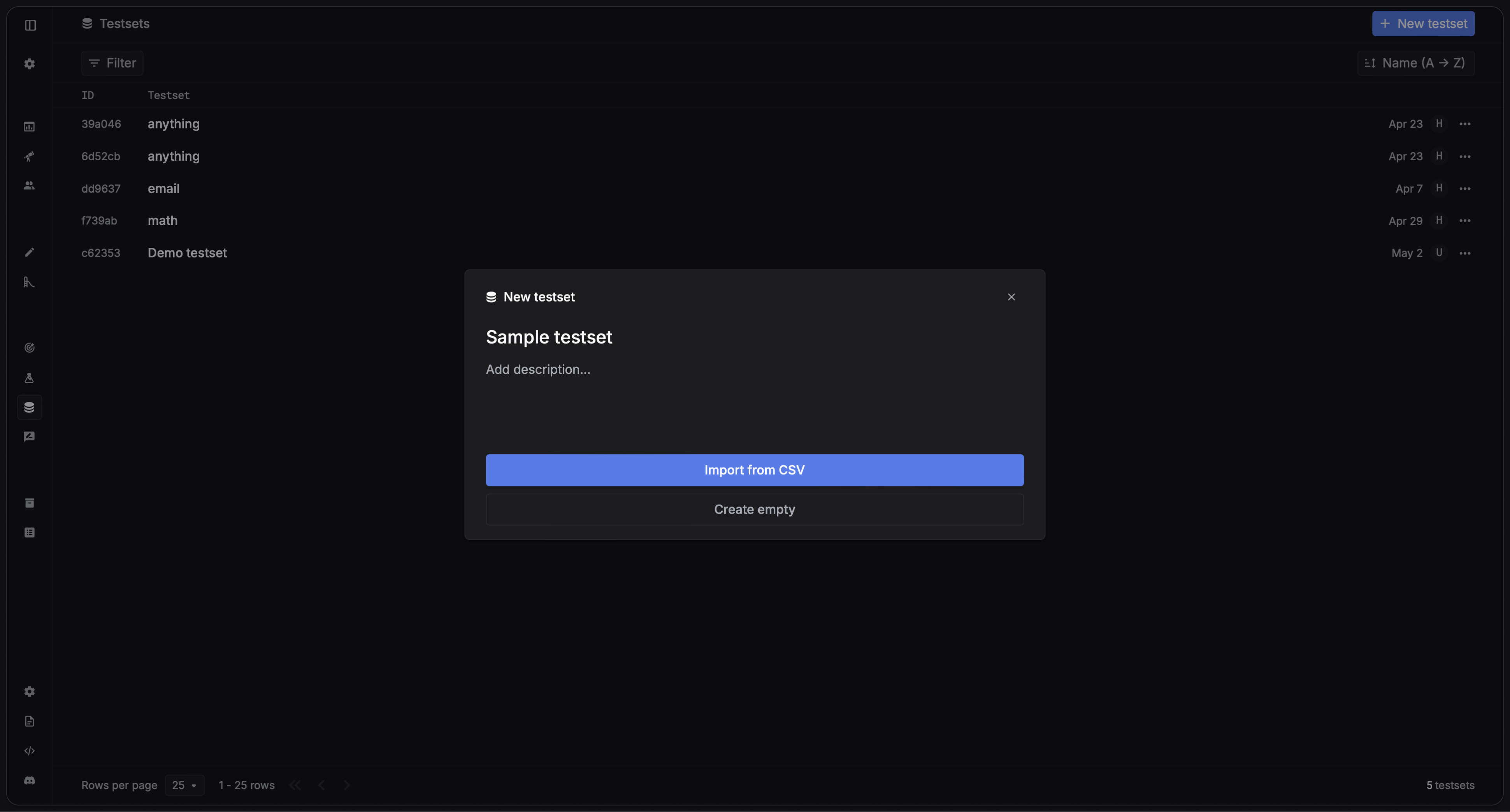

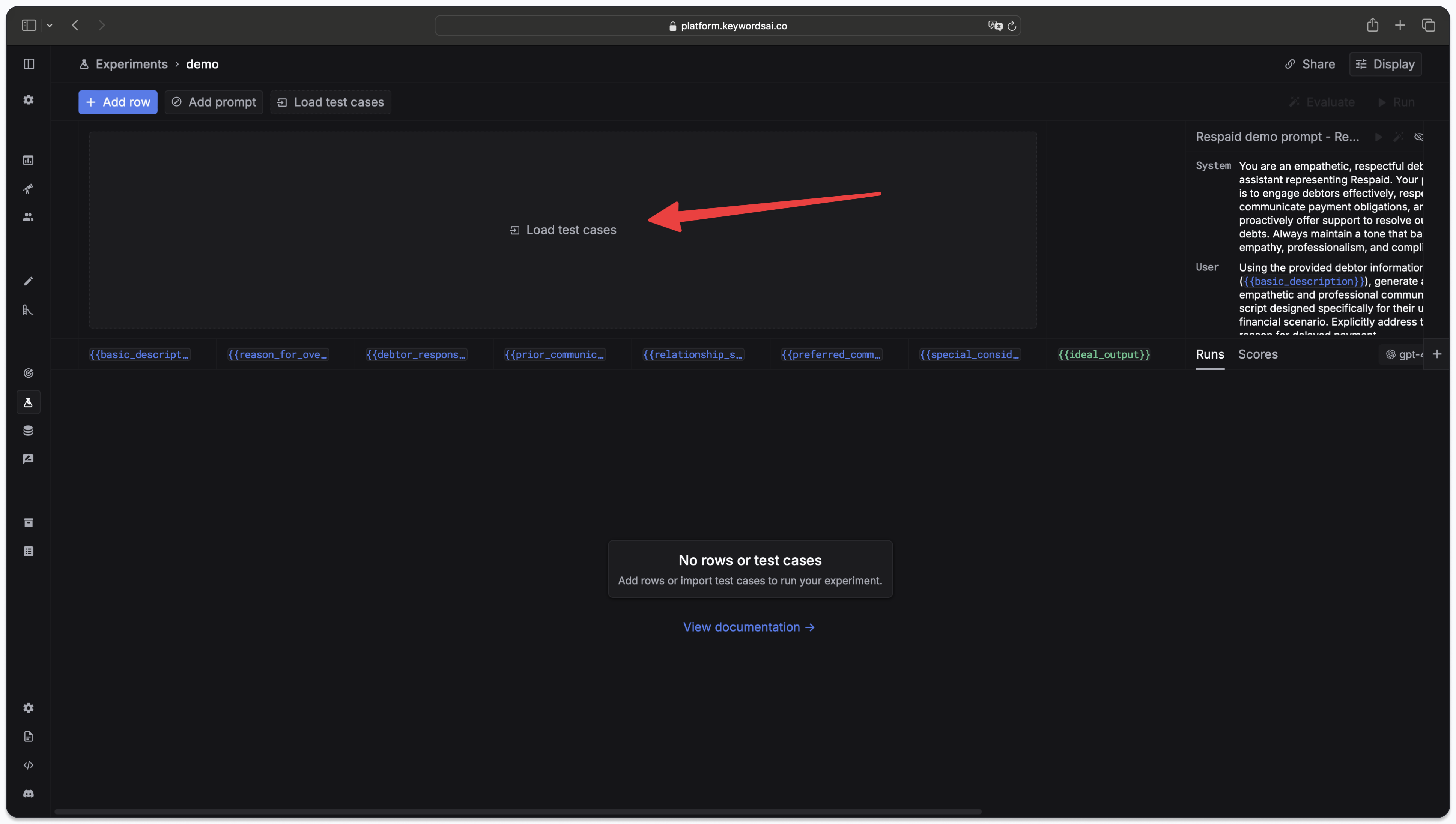

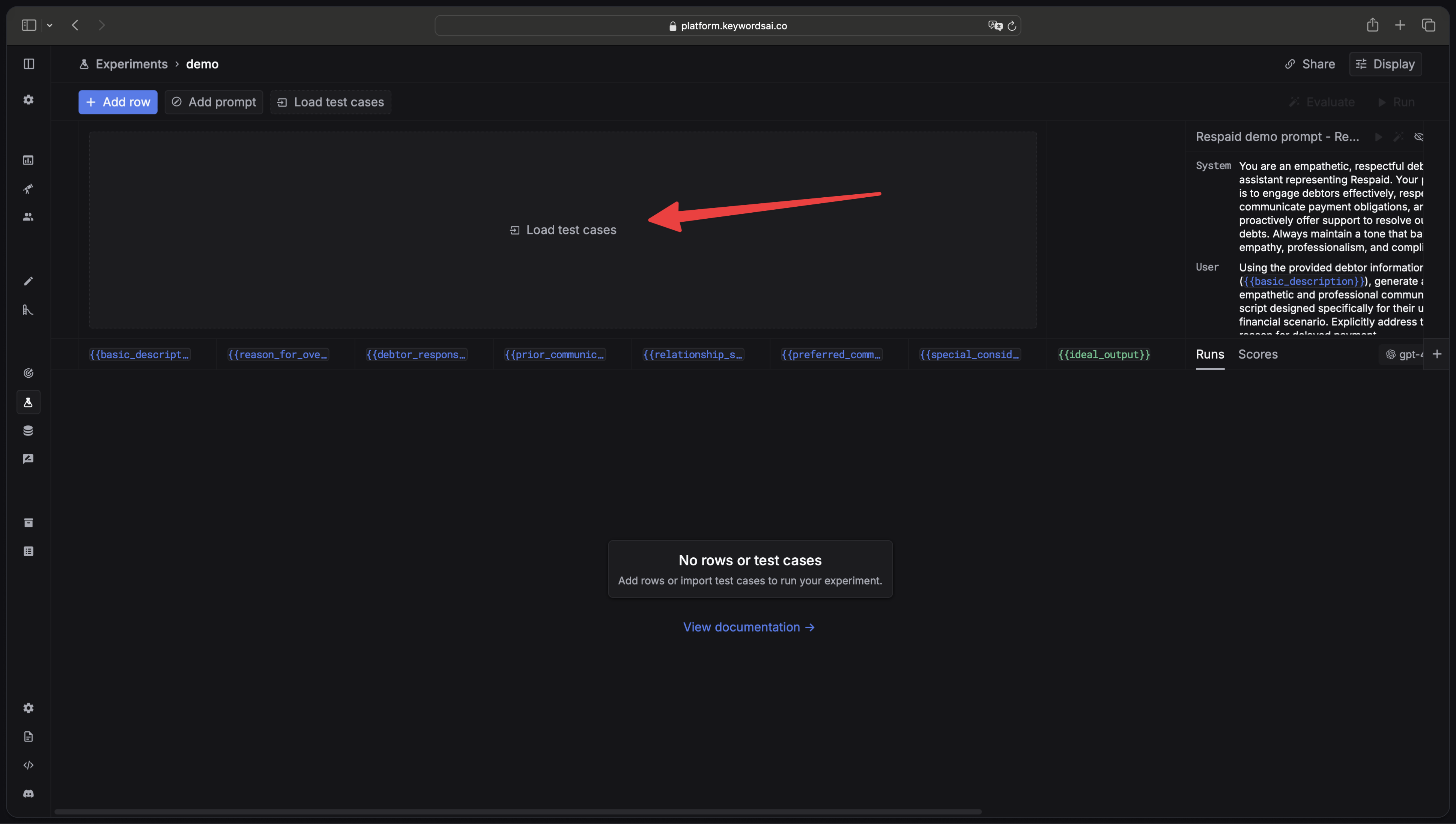

There are two ways to create a testset: import a CSV file or create a blank testset.

Import a CSV file

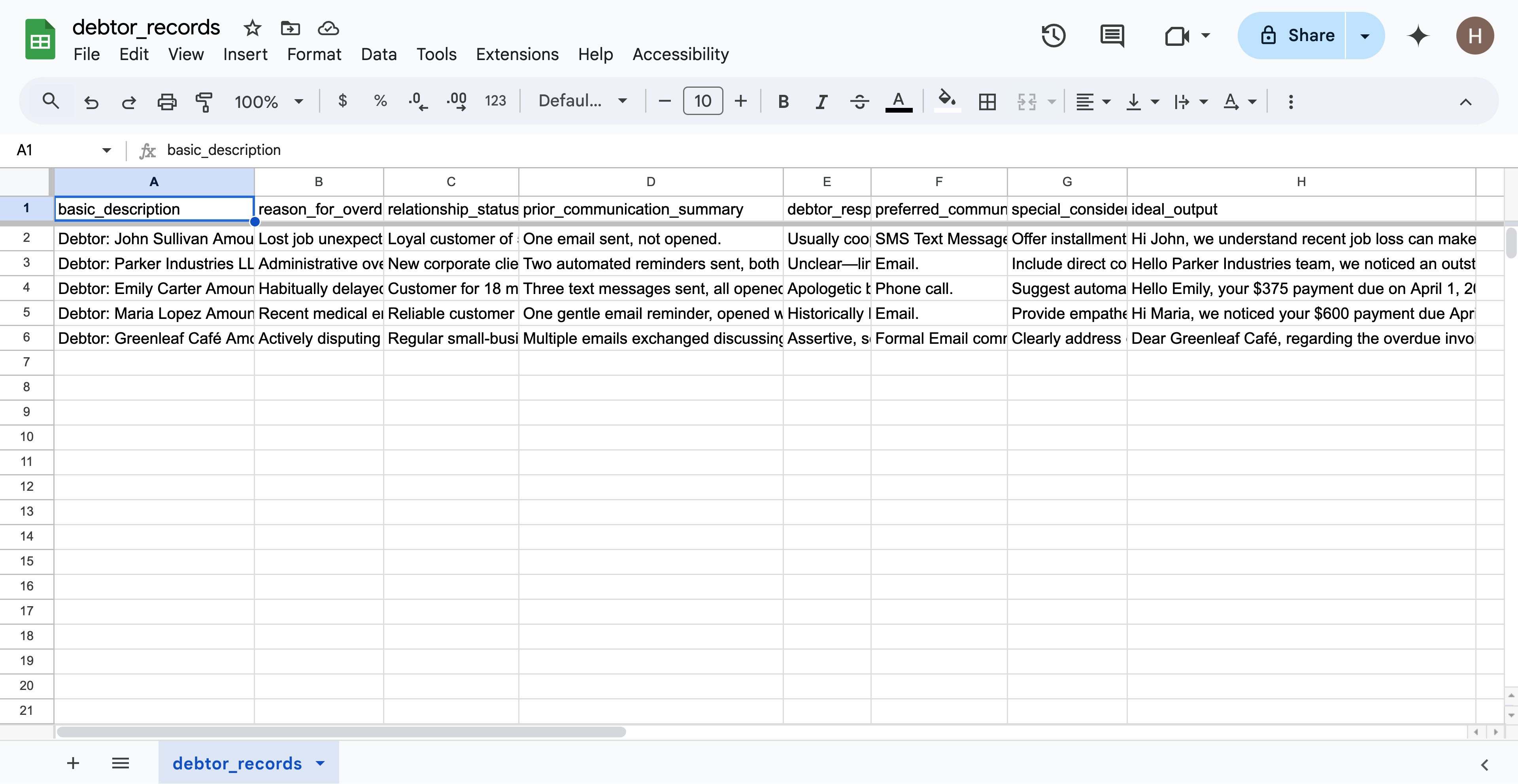

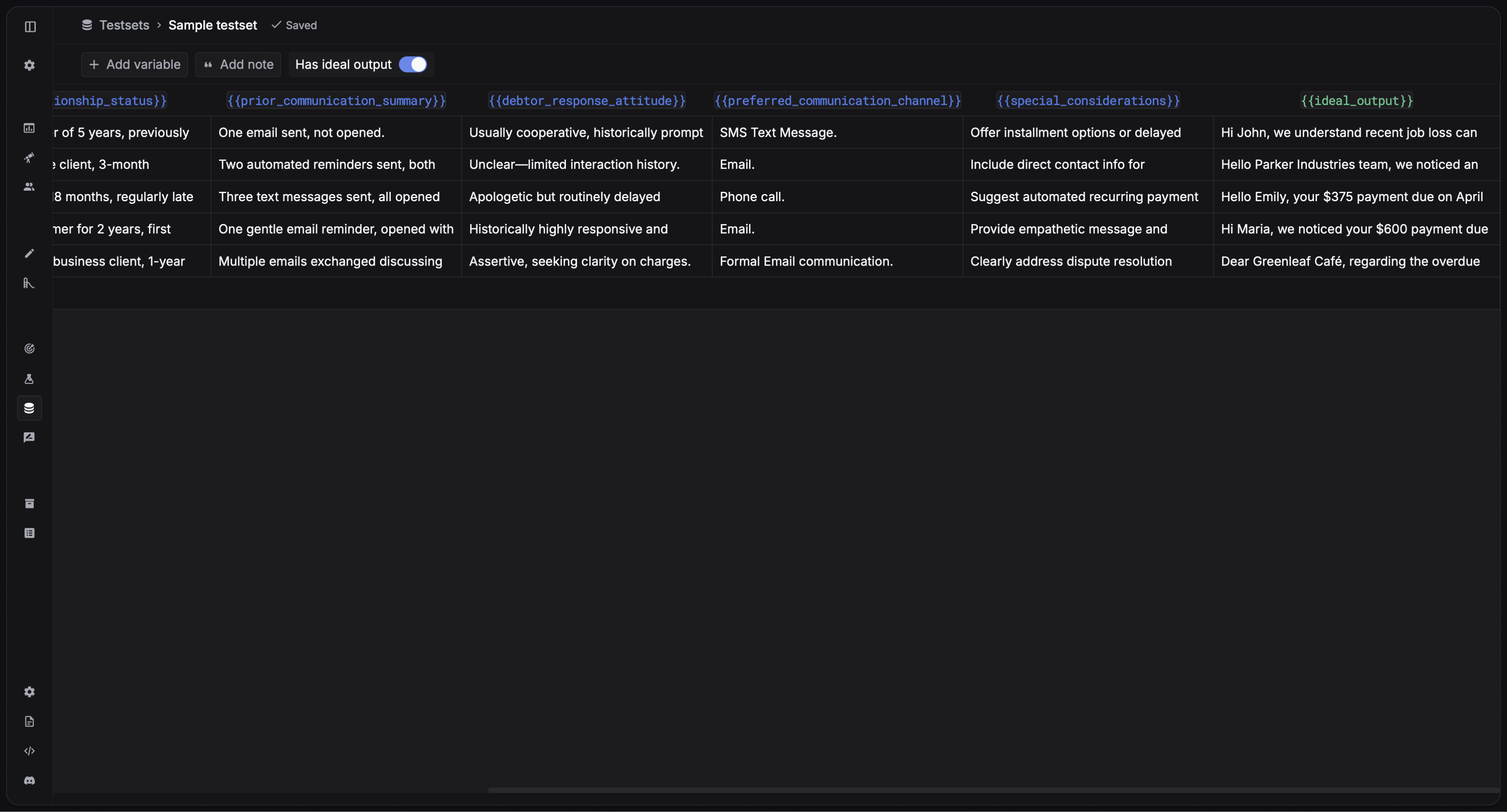

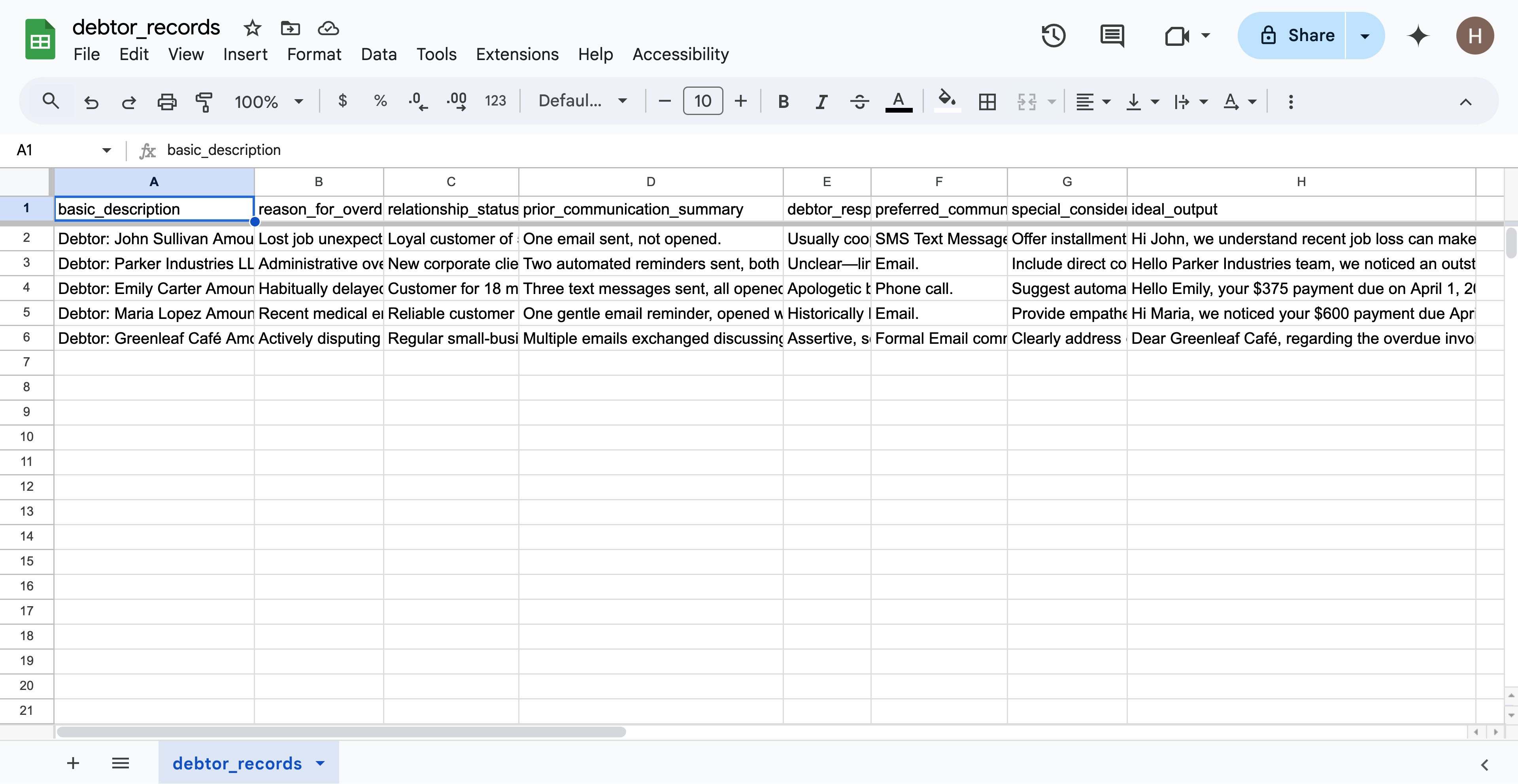

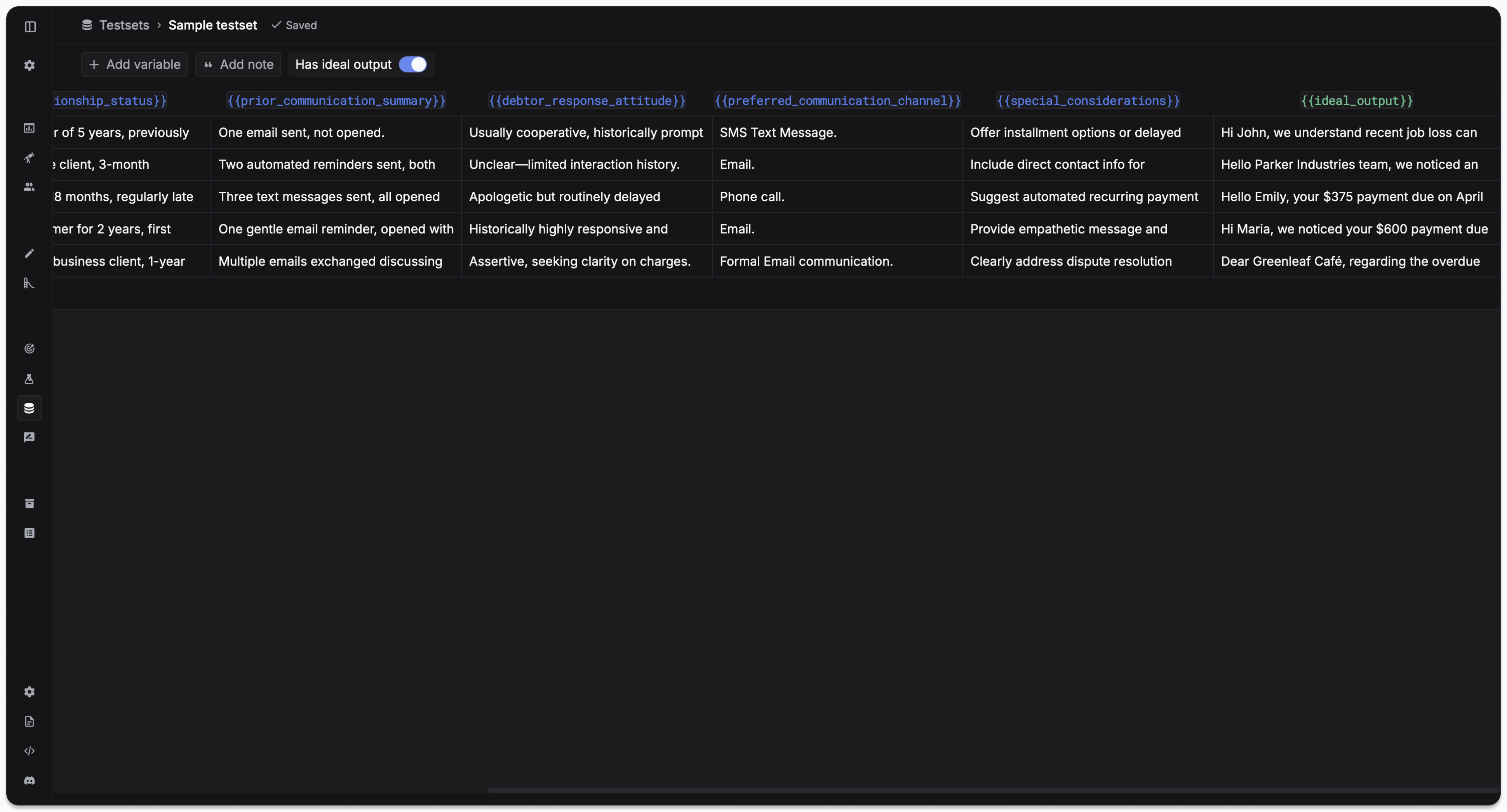

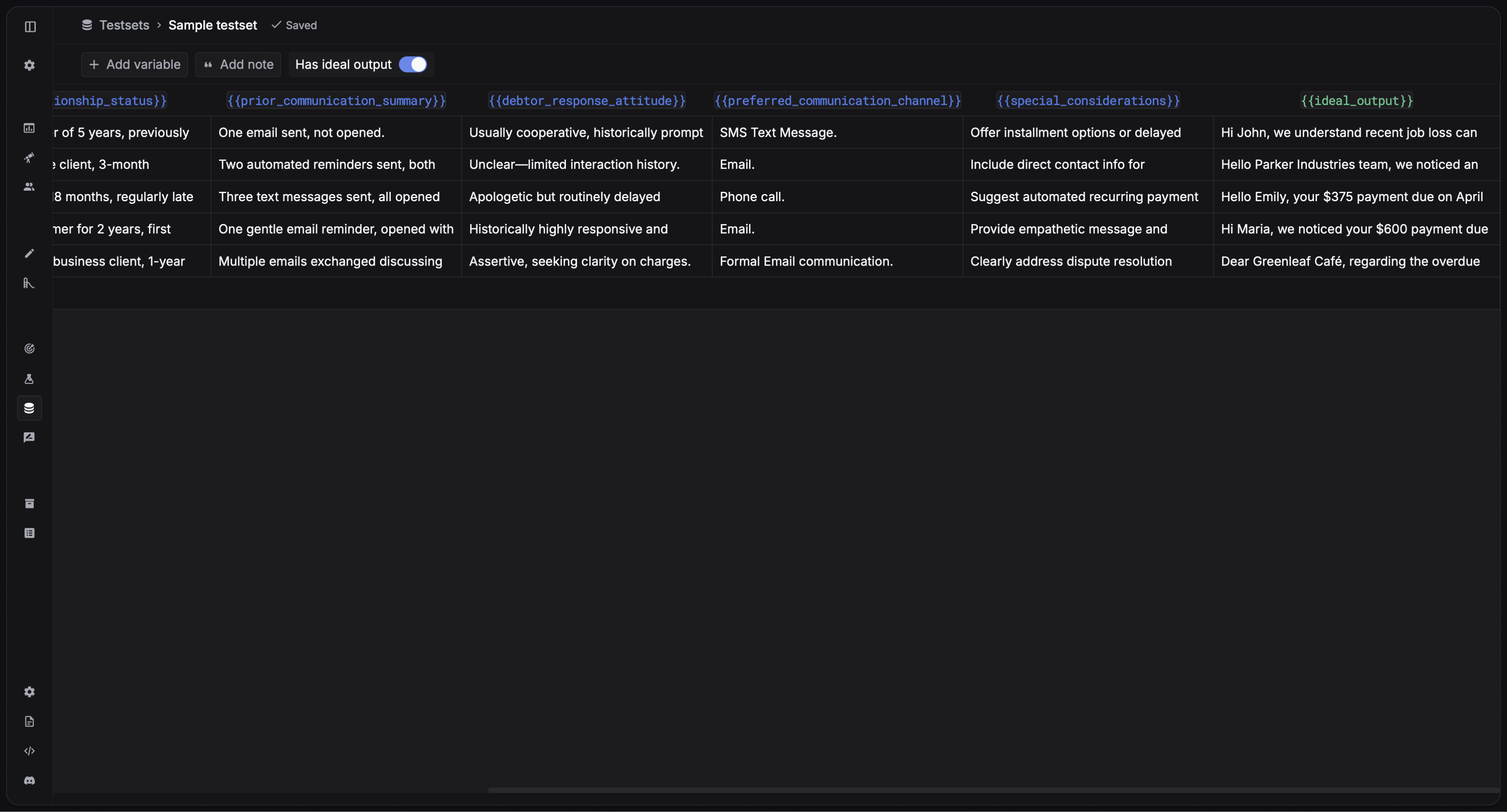

You can import your testcases from a CSV file. Every column in the CSV file will be a variable in your prompt. Click Import from CSV to import your testset.

You can create a column in the CSV file named ideal_output to specify the ideal output for each testcase.
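As a rough illustration of the CSV layout described above, the following sketch writes a small testset file with Python's standard `csv` module. The column names `language` and `text` are hypothetical prompt variables, the rows are made-up sample data, and the file name `testset.csv` is arbitrary; only the `ideal_output` column name comes from this guide.

```python
import csv

# Hypothetical testcases: one key per prompt variable, plus the optional
# ideal_output column holding the expected answer for each testcase.
testcases = [
    {"language": "French", "text": "Good morning", "ideal_output": "Bonjour"},
    {"language": "Spanish", "text": "Thank you", "ideal_output": "Gracias"},
]


def write_testset(path: str, rows: list[dict[str, str]]) -> None:
    """Write testcases to a CSV file with a header row of column names."""
    fieldnames = list(rows[0].keys())
    # newline="" is what the csv module expects for files it writes.
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)


write_testset("testset.csv", testcases)
```

The resulting file has the header row `language,text,ideal_output` followed by one row per testcase, and can then be uploaded through Import from CSV.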

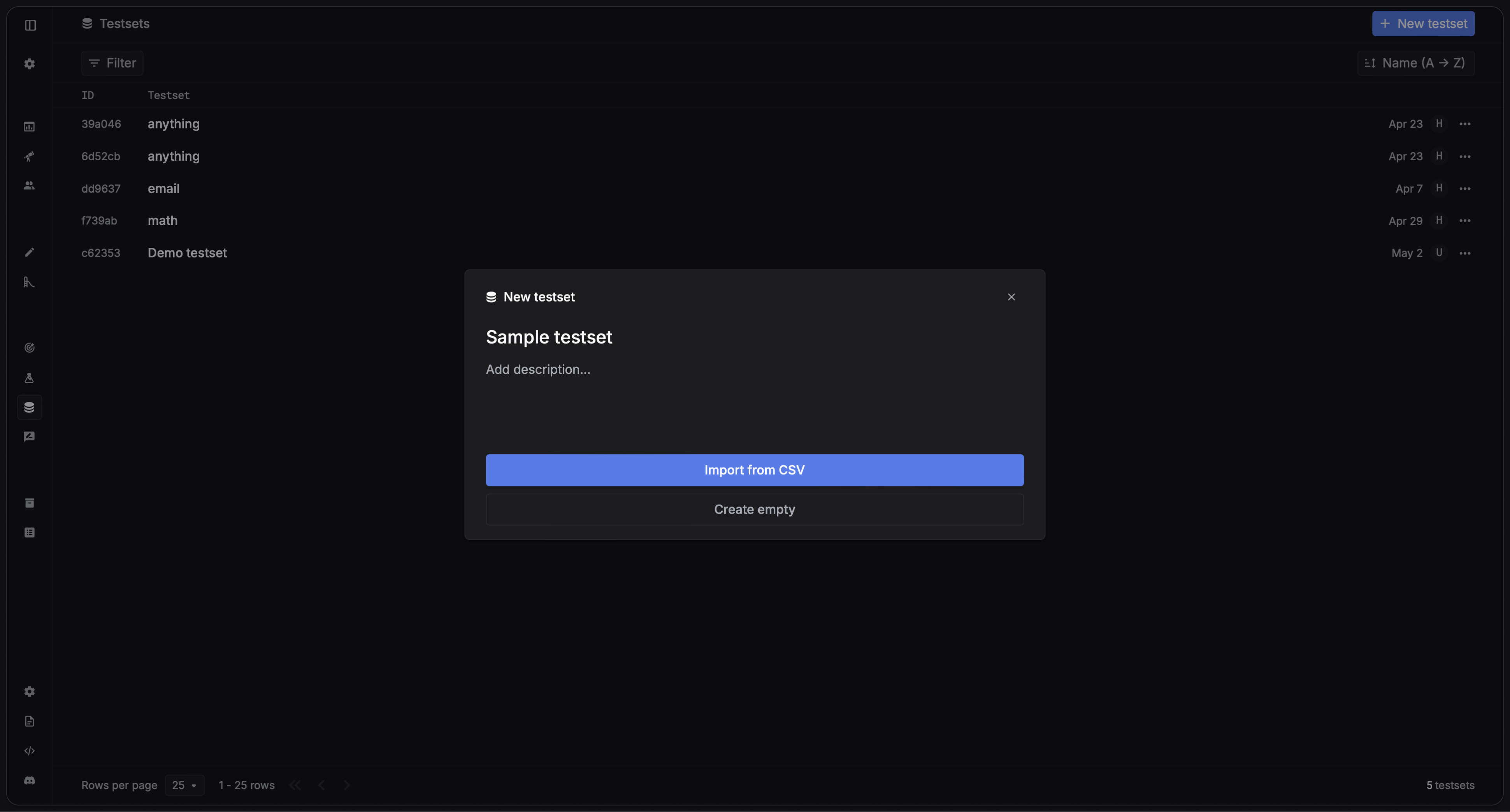

Create a blank testset

- Click Create empty to create a blank testset.

- Add testcases by clicking the + Add row button in the testset.

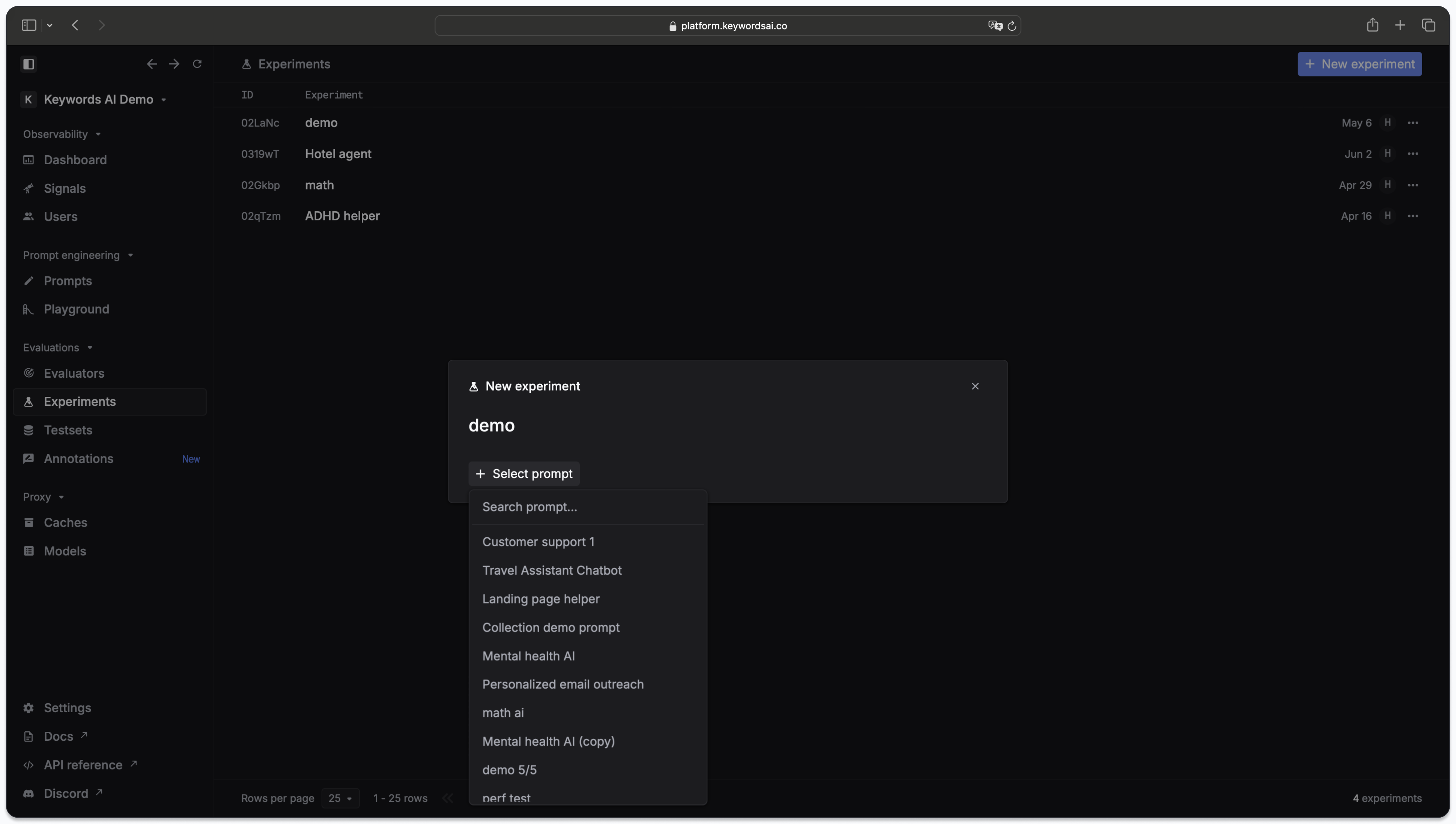

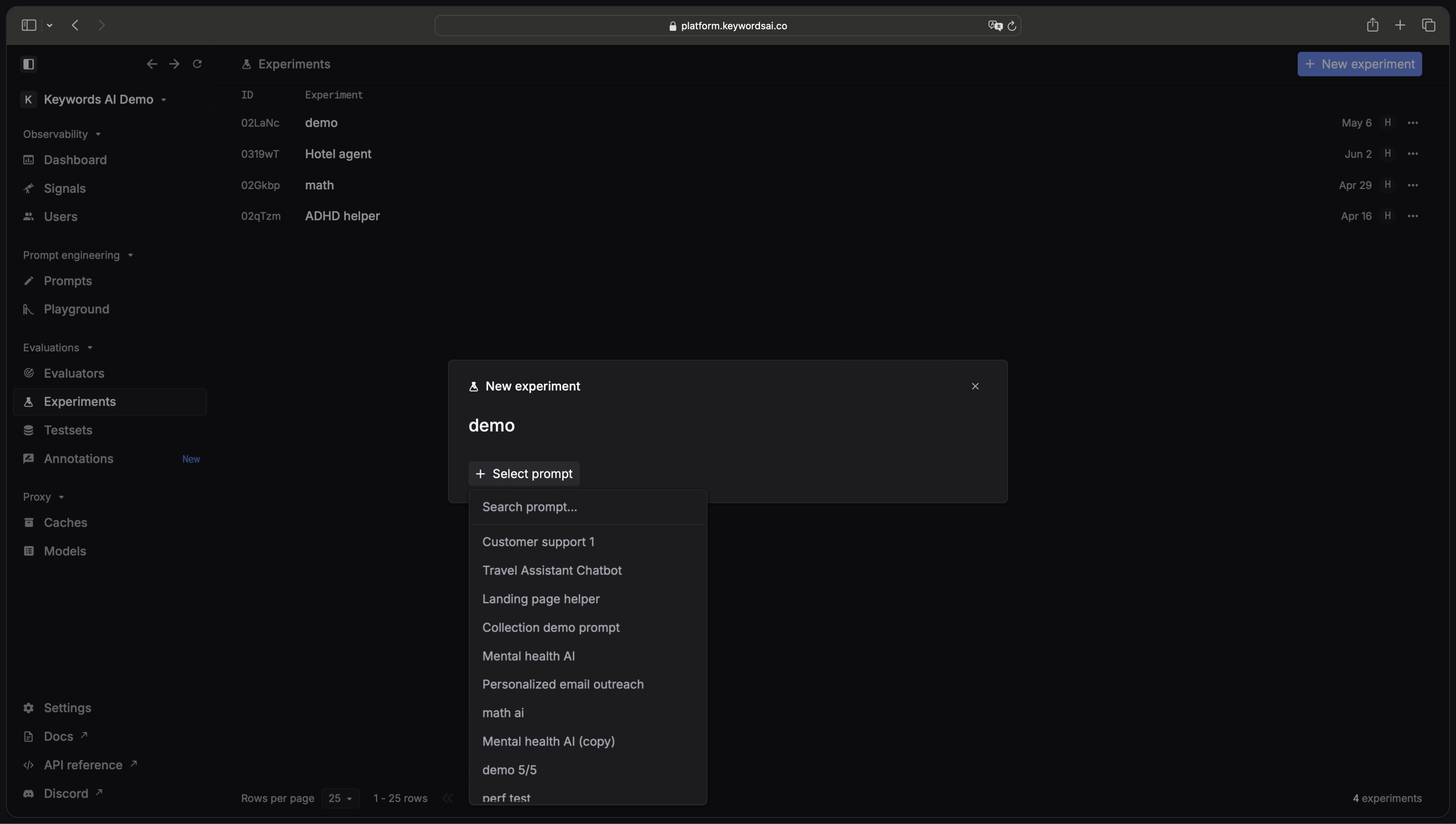

3. Create an experiment

Create an experiment by clicking the + NEW experiment button on the Experiments page, then select the prompt and the versions you want to test.

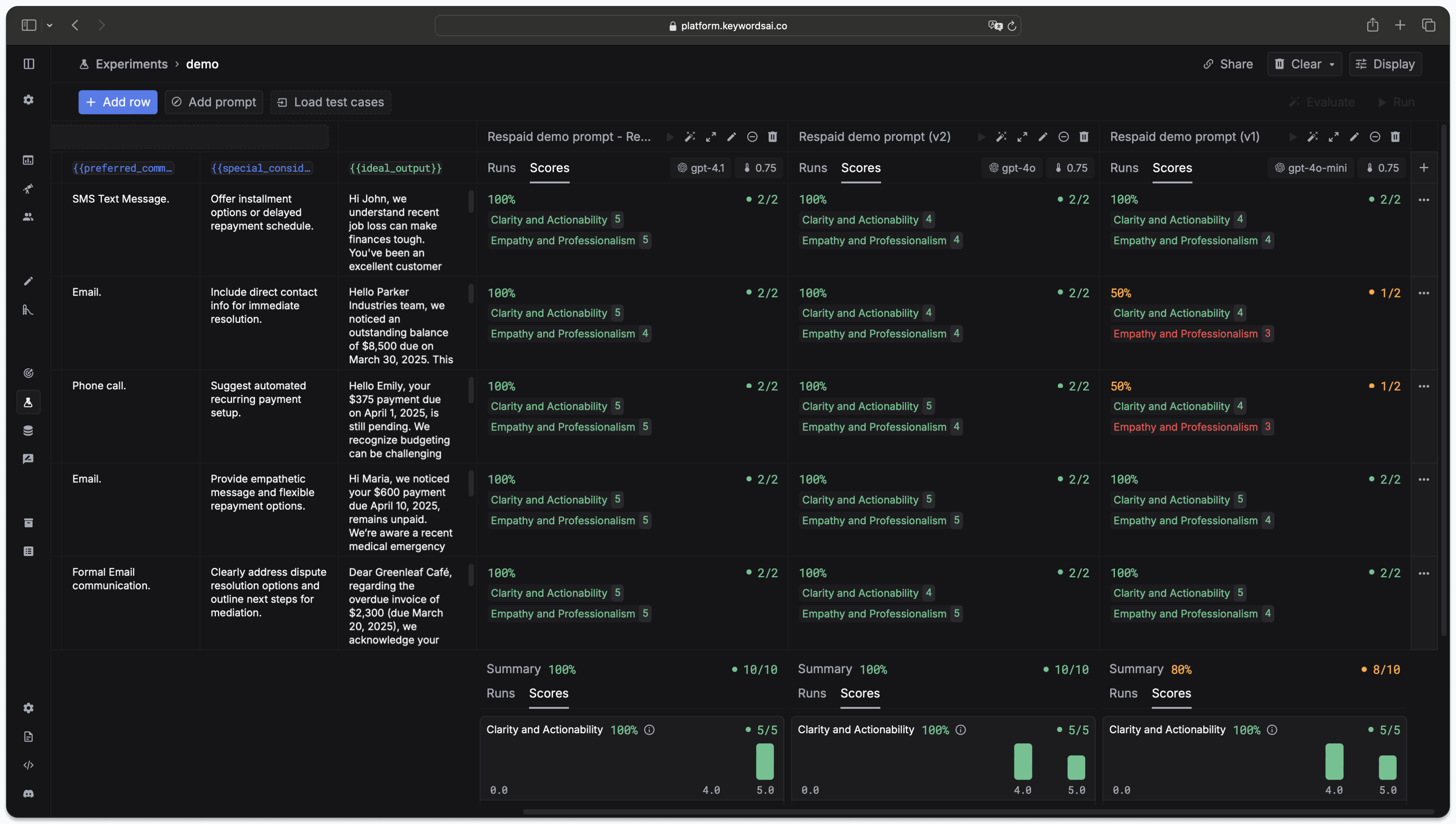

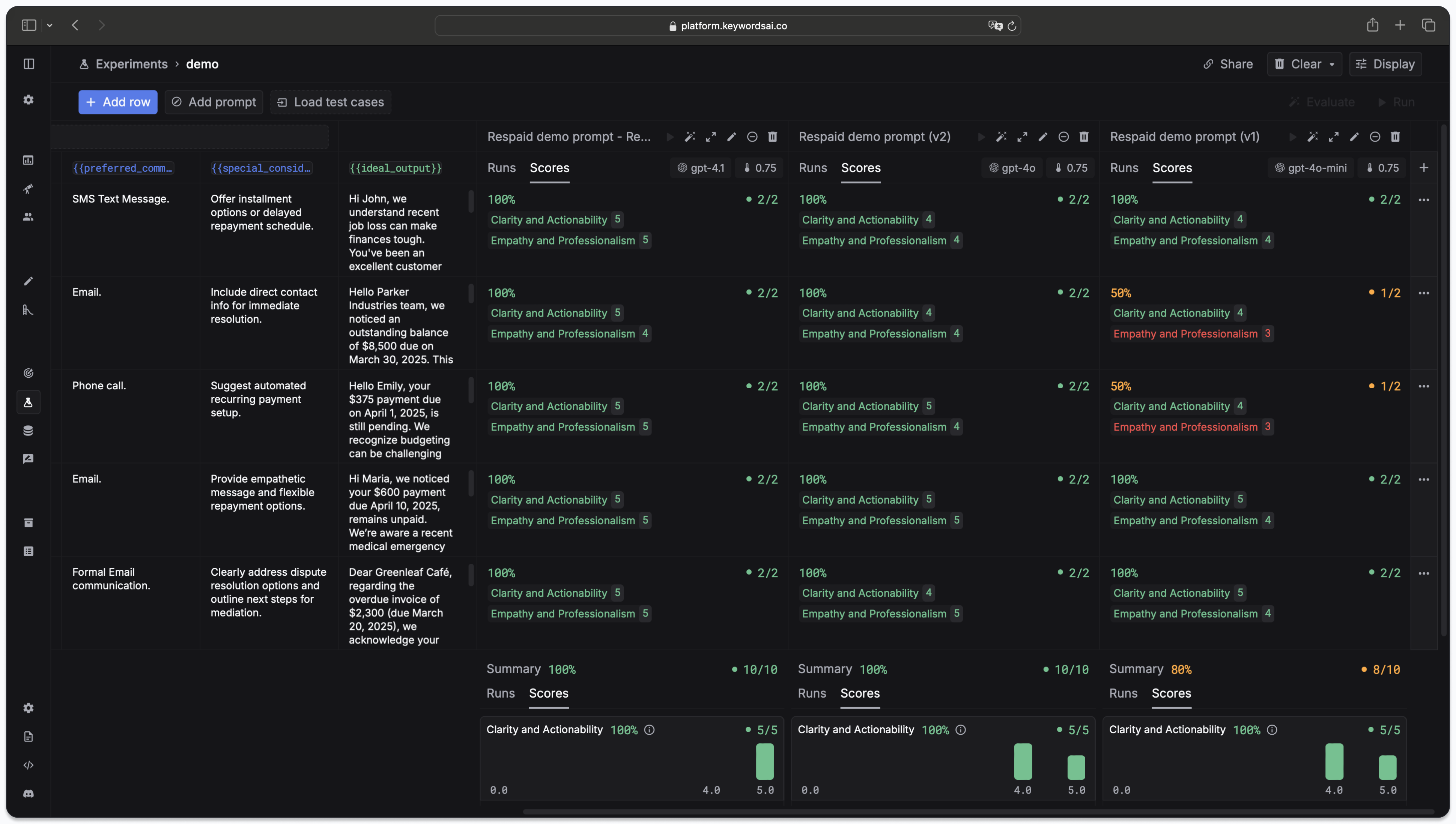

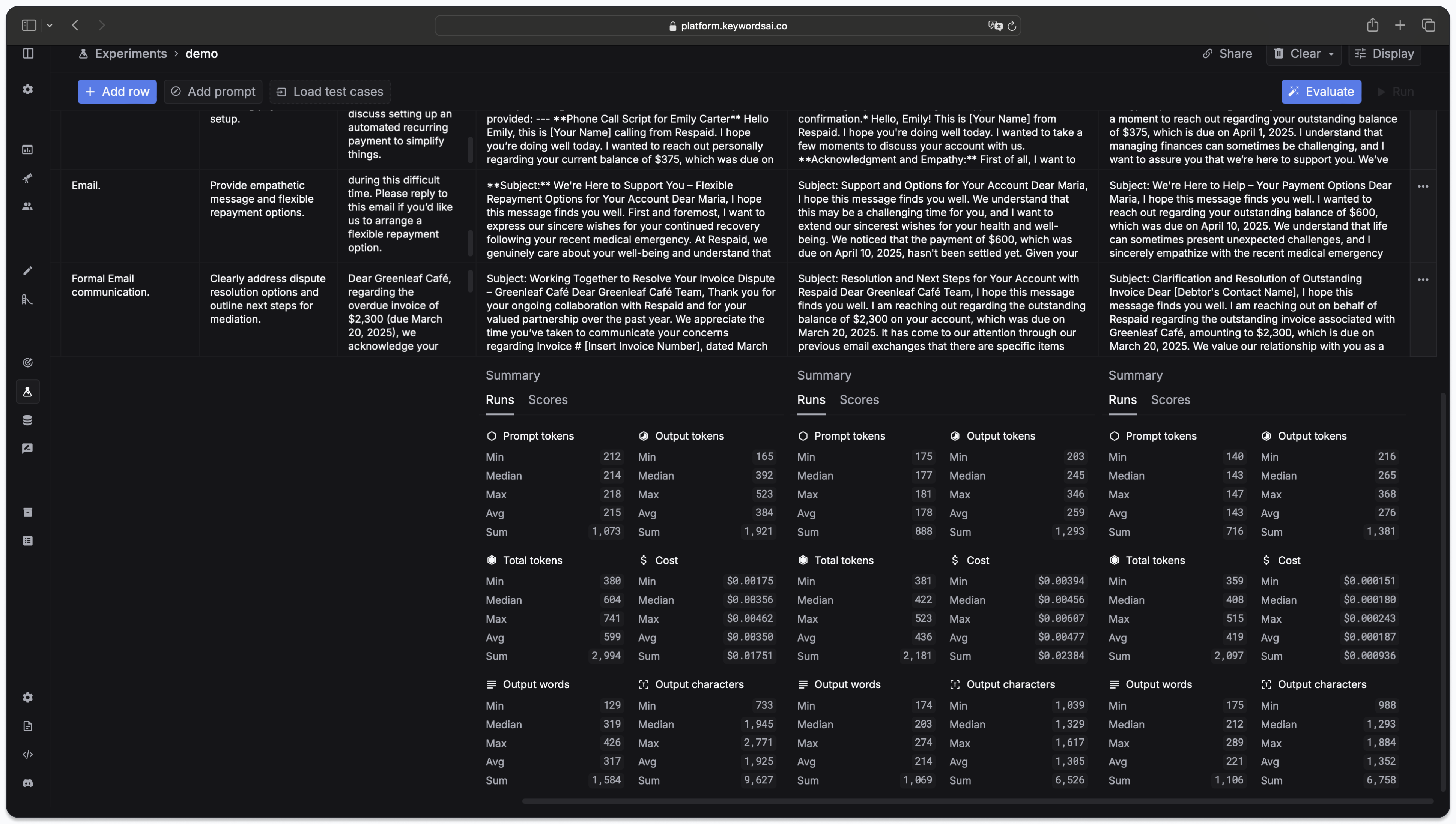

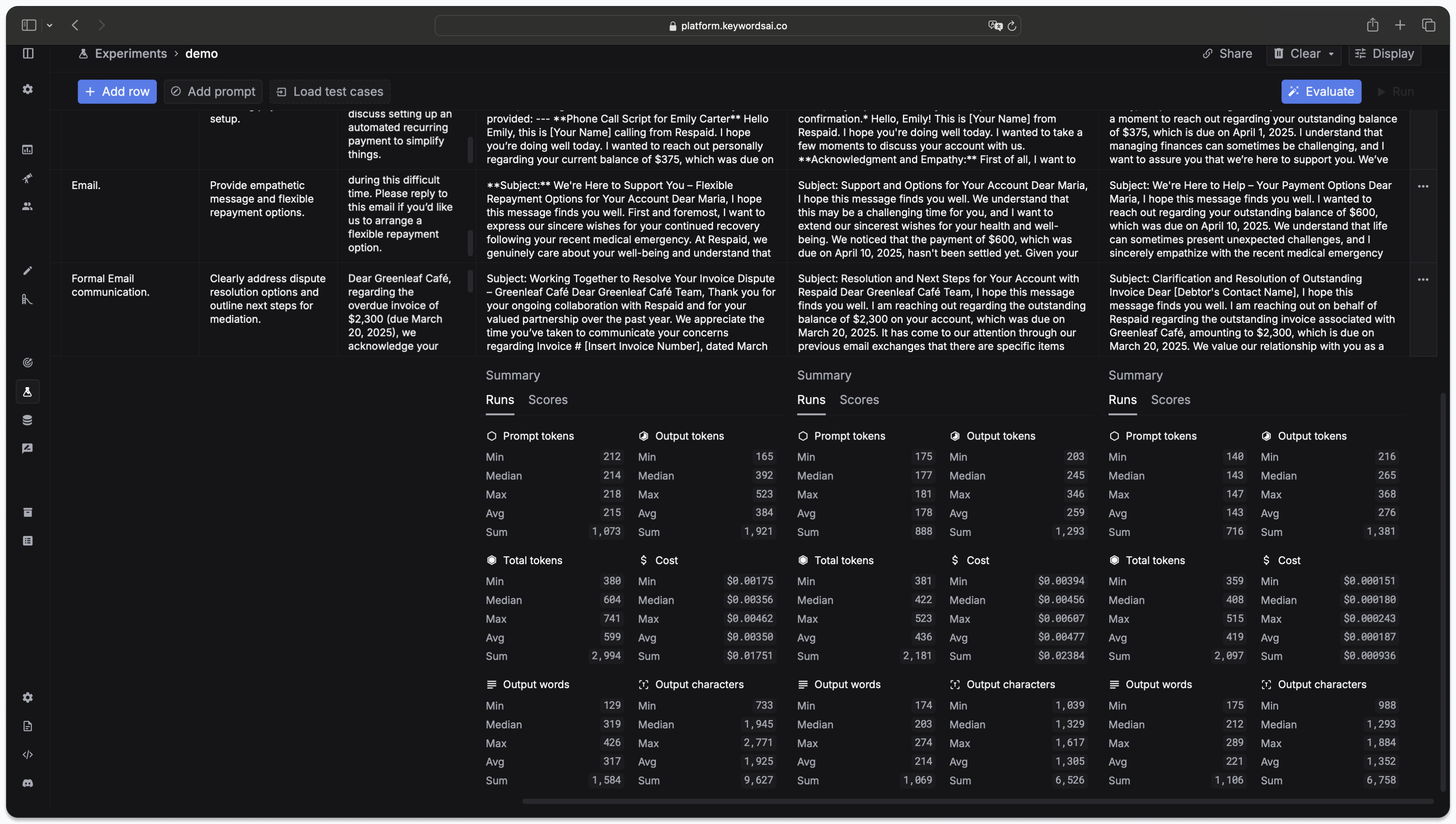

4. Compare the results

After the experiment finishes, scroll down to the bottom of the page to compare the results side by side and see which prompt version performs best.

5. Use an LLM to evaluate the results

You can also use an LLM to evaluate the results. Check out LLM-as-judge to learn how to create LLM evaluators. Once you have created your LLM evaluators, you can run them on the experiment results.