How to log prompt variables

Via LLM Gateway

If you use the LLM Gateway, first set up your prompts in code. The LLM requests and their variables are then logged automatically. Example: when you create a prompt like this:

Via Logging ingestion

When you send a request through Logging ingestion, include the prompt variables in the prompt_messages field. Wrap each prompt variable in a pair of double curly braces: {{}}.

Example:
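As a minimal sketch of the wrapping convention described above, the Python snippet below builds a prompt_messages list whose contents contain {{}} placeholders and then lists the variable names it finds. Only the prompt_messages field name and the {{}} syntax come from this page. The message shape (role and content keys), the variable names, the find_variables helper, and the identifier pattern in the regular expression are all assumptions made for illustration, and no request is actually sent.

```python
import re

# Matches {{ name }} placeholders. Treating names as word characters
# (letters, digits, underscore) is an assumption, not a documented rule.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_variables(prompt_messages):
    """Return placeholder names in order of first appearance, without duplicates."""
    names = []
    for message in prompt_messages:
        for name in PLACEHOLDER.findall(message["content"]):
            if name not in names:
                names.append(name)
    return names


# Hypothetical payload fragment: only the "prompt_messages" field name is
# taken from the text above; the rest of the shape is illustrative.
payload = {
    "prompt_messages": [
        {"role": "system", "content": "You are a support agent for {{product}}."},
        {
            "role": "user",
            "content": "Summarize this ticket for {{customer_name}}: {{ticket_text}}",
        },
    ]
}

variable_names = find_variables(payload["prompt_messages"])
print(variable_names)  # ['product', 'customer_name', 'ticket_text']
```

A check like this can be run before sending a log, to confirm that every variable you expect to see in the side panel is actually wrapped in {{}}.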

Why you should log prompt variables

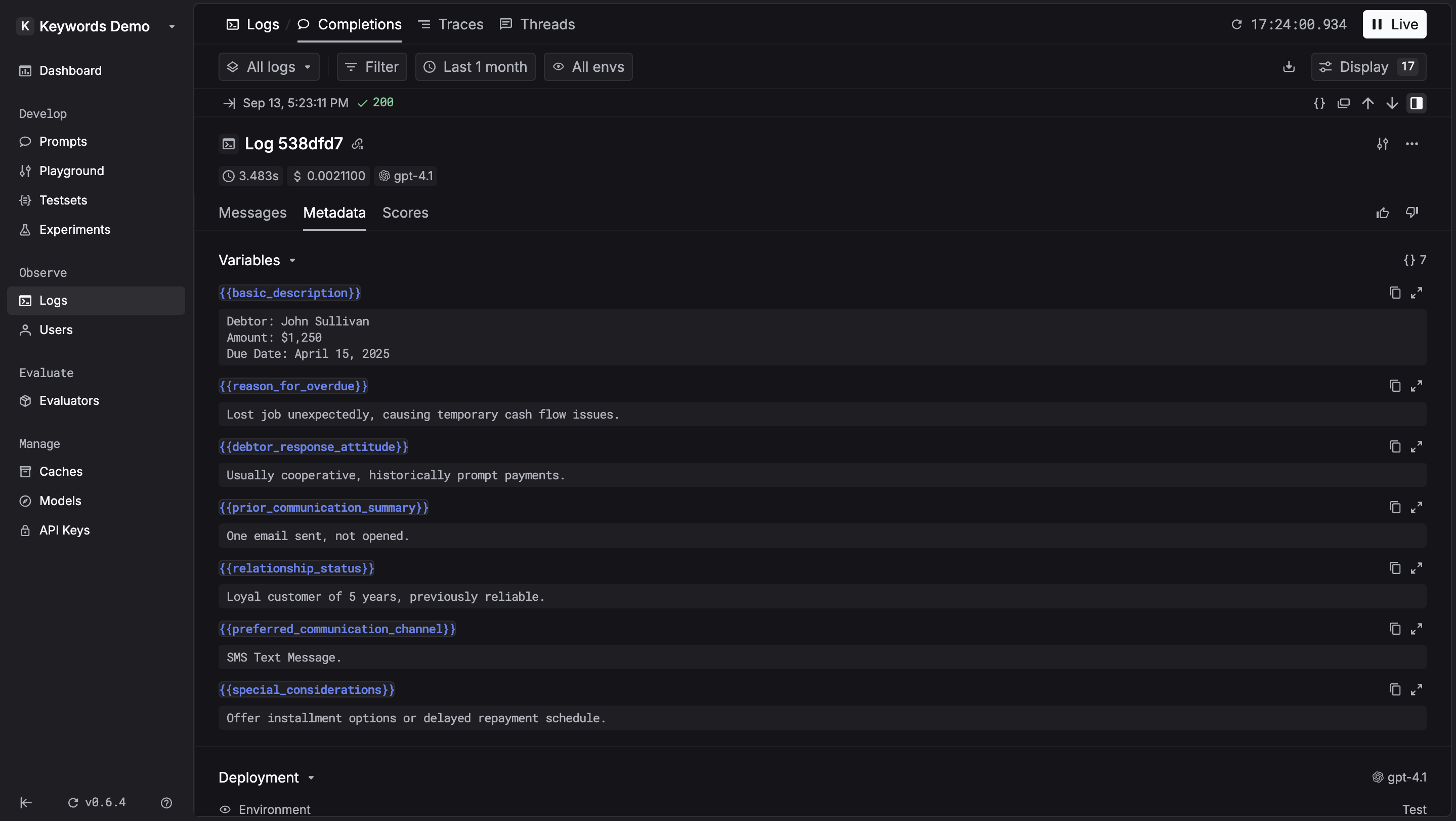

Easy to read: Logged prompt variables appear in the side panel, so you can quickly inspect the content of each variable without digging into the full prompt.

Easy to add to a testset: Logged variables make it simple to turn real-world logs into test cases. With one click, you can add them to a testset and start iterating on your prompts with realistic data.