This section is for Keywords AI LLM gateway users.

Prerequisites

- A Keywords AI API key

- An OpenAI API key (BYOK)

Supported SDKs / integrations

✅ Supported Frameworks

❌ Unsupported Frameworks

Configuration

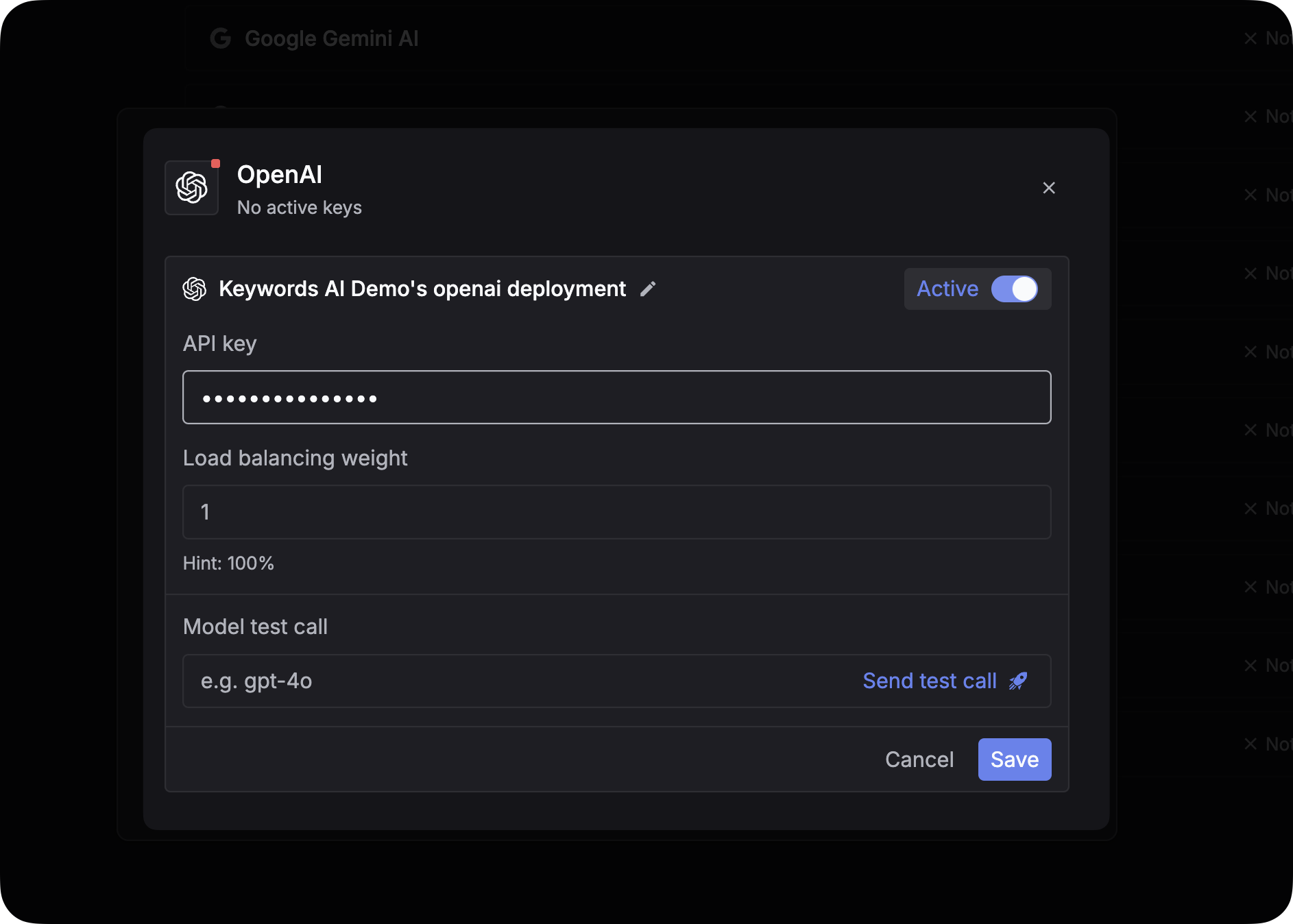

There are two ways to add your OpenAI credentials to your requests:

Via UI (Global)

Navigate to Providers

Go to the Providers page. This page lets you manage credentials for more than 20 supported providers.

Via code (Per-Request)

You can pass credentials dynamically in the request body. This is useful if you need to use your users’ own API keys (BYOK). To do so, add the customer_credentials parameter to your Gateway request.
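As a rough illustration of the per-request approach, the sketch below builds (but does not send) a chat request that carries an OpenAI key in customer_credentials. The endpoint URL, the model name, the helper name build_gateway_request, and the nested shape of customer_credentials (provider name mapped to an api_key) are assumptions made for this example; check them against the Keywords AI API reference before relying on them.

```python
import json
import urllib.request

# Assumed endpoint; confirm against your Keywords AI dashboard or docs.
GATEWAY_URL = "https://api.keywordsai.co/api/chat/completions"


def build_gateway_request(keywordsai_key, openai_key, prompt, model="gpt-4o-mini"):
    """Build (but do not send) a chat request carrying per-request credentials."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumed shape: provider name mapped to that provider's credentials.
        "customer_credentials": {"openai": {"api_key": openai_key}},
    }
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The Keywords AI key authenticates the Gateway call itself.
            "Authorization": f"Bearer {keywordsai_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send the request, pass the returned object to urllib.request.urlopen. Keeping construction separate from sending makes it easy to inspect the body and confirm that the customer's key travels only in the request, not in global configuration.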

Log OpenAI requests

If you are not using the Gateway to proxy requests, you can still log your OpenAI requests to Keywords AI asynchronously. This allows you to track cost, latency, and performance metrics for external calls.

OpenAI Python SDK
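The asynchronous logging described here could be sketched as follows: after an OpenAI call finishes, describe it in a small payload and post that payload from a background thread so the caller is not blocked. The logging URL, the payload field names (model, prompt_messages, completion_message, latency), and all helper names are assumptions for illustration, not the confirmed Keywords AI schema; the full logging guide is the authority on the actual fields.

```python
import json
import threading
import urllib.request

# Assumed logging endpoint; confirm against the Keywords AI logging guide.
LOG_URL = "https://api.keywordsai.co/api/request-logs/create/"


def build_log_payload(model, messages, completion_text, latency_seconds):
    """Describe one finished LLM call. Field names here are assumptions."""
    return {
        "model": model,
        "prompt_messages": messages,
        "completion_message": {"role": "assistant", "content": completion_text},
        "latency": latency_seconds,
    }


def post_log(payload, keywordsai_key):
    """Send the payload over HTTP (blocking)."""
    req = urllib.request.Request(
        LOG_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {keywordsai_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


def log_async(payload, keywordsai_key, sender=post_log):
    """Run the sender on a background thread so the caller is not blocked."""
    thread = threading.Thread(
        target=sender, args=(payload, keywordsai_key), daemon=True
    )
    thread.start()
    return thread
```

In practice, the messages and completion text would come from the OpenAI Python SDK call you just made, with the latency measured around it. Because the thread is a daemon, a short-lived script should call join() on the returned thread before exiting, otherwise the log may be dropped.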

Get Started with Logging

View the full guide on setting up comprehensive logging for your LLM stack.