This integration is for the Keywords AI gateway. For workflow tracing, see Haystack Tracing.

Overview

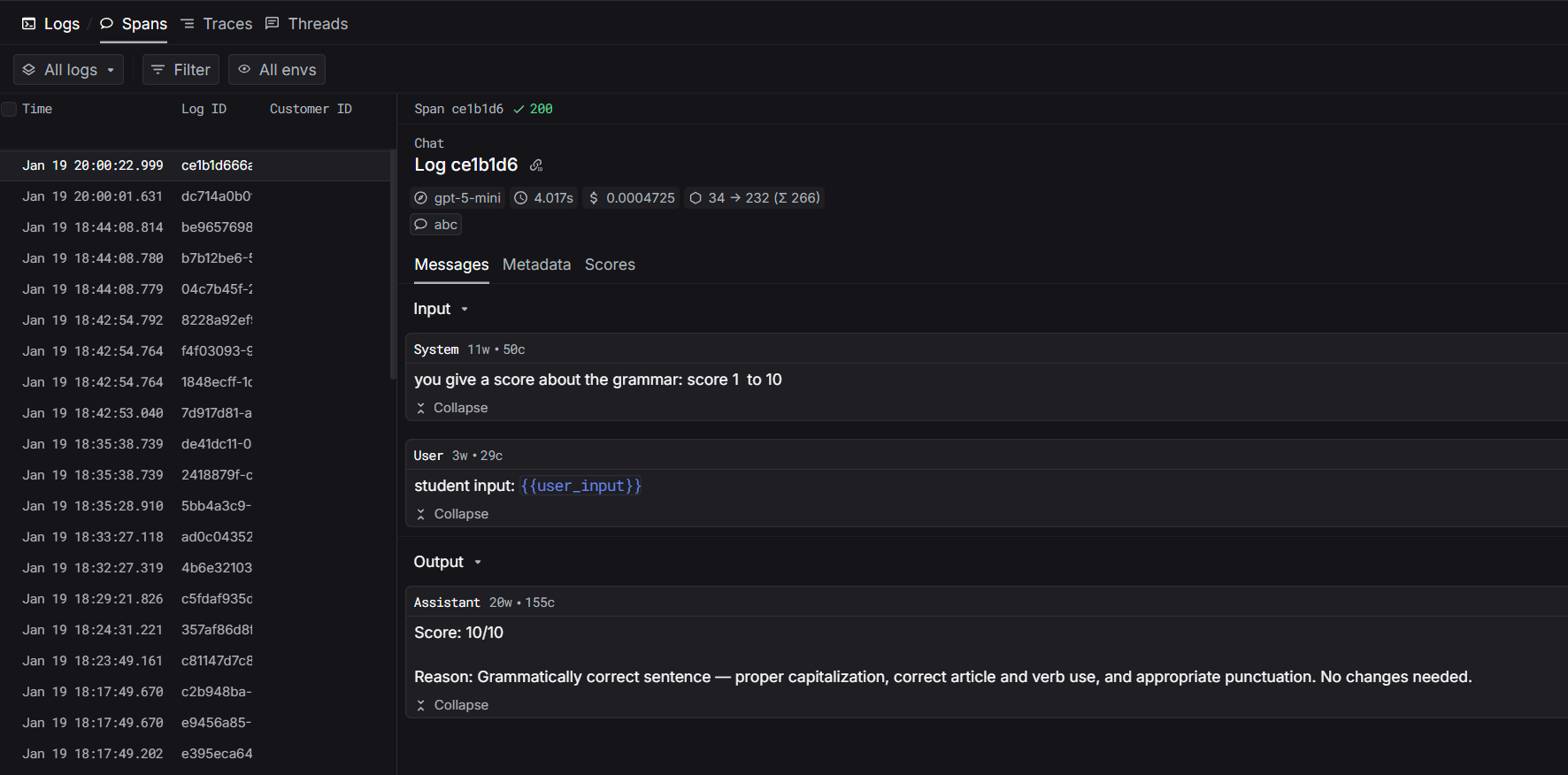

Haystack is an open-source framework for building LLM applications with composable pipelines. The Keywords AI gateway integration routes your LLM calls through Keywords AI for automatic logging, fallbacks, load balancing, and cost optimization.

Installation

Quickstart

Step 1: Set Environment Variables
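
This page does not list the variable names the integration reads. The sketch below assumes a single key in `KEYWORDSAI_API_KEY`; that name and the helpers `missing_env_vars` and `require_env` are hypothetical. It checks for the variables at startup so a missing key fails immediately rather than on the first gateway call.

```python
import os
from typing import Iterable, List, Mapping, Optional

# Hypothetical variable list: the exact names the integration reads are an
# assumption here, not something this page specifies.
REQUIRED_VARS = ("KEYWORDSAI_API_KEY",)


def missing_env_vars(
    required: Iterable[str] = REQUIRED_VARS,
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return the names in `required` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


def require_env(
    required: Iterable[str] = REQUIRED_VARS,
    env: Optional[Mapping[str, str]] = None,
) -> None:
    """Raise at startup instead of failing later at the first gateway call."""
    missing = missing_env_vars(required, env)
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
```

Call `require_env()` once before building the pipeline. Passing an explicit `env` dict keeps the check testable without touching the real environment.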

Step 2: Replace OpenAIGenerator with KeywordsAIGenerator
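
This page does not reproduce the `KeywordsAIGenerator` constructor, so the sketch does not guess at it. It uses only the standard library to show what routing through the gateway means at the HTTP level, assuming the gateway accepts OpenAI-style chat completion requests. `GATEWAY_URL` is an assumed endpoint and `build_gateway_request` is a hypothetical helper. The request is built but never sent.

```python
import json
import urllib.request
from typing import Any

# Assumed endpoint for an OpenAI-compatible chat completions route on the
# Keywords AI gateway. Verify the exact URL in the Keywords AI docs.
GATEWAY_URL = "https://api.keywordsai.co/api/chat/completions"


def build_gateway_request(
    api_key: str, model: str, prompt: str, **params: Any
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        **params,  # extra parameters are merged into the JSON body unchanged
    }
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # Keywords AI key, not an OpenAI key
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Under this assumption, swapping generators changes where the request goes and which key authenticates it. The `model` and `messages` fields keep their OpenAI shape.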

Prompt Management

Use platform-managed prompts for centralized control:

- Update prompts without code changes
- Model configuration managed on the platform
- Version control & rollback
- A/B testing

Create prompts at: platform.keywordsai.co/platform/prompts
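
The following is a minimal sketch of a request body that references a platform-managed prompt instead of sending messages inline. The `prompt`, `prompt_id`, and `variables` field names are assumptions modeled on the Keywords AI API and do not come from this page. `build_managed_prompt_body` and `"your-prompt-id"` are hypothetical. The body carries no model and no message text because, under this assumption, both live on the platform. That is what allows prompt updates without code changes.

```python
import json
from typing import Any, Dict


def build_managed_prompt_body(prompt_id: str, variables: Dict[str, Any]) -> Dict[str, Any]:
    """Reference a platform-managed prompt by ID and fill its template variables.

    Field names are assumptions; confirm them in the Keywords AI prompt docs.
    """
    if not prompt_id:
        raise ValueError("prompt_id must be a non-empty string")
    return {
        "prompt": {
            "prompt_id": prompt_id,
            "variables": dict(variables),  # copy so later edits don't leak in
        }
    }


# "your-prompt-id" is a placeholder for an ID copied from the platform.
body = build_managed_prompt_body("your-prompt-id", {"topic": "vector databases"})
print(json.dumps(body, indent=2))
```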

Supported Parameters
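
Both parameter groups below ultimately become fields of the request the gateway receives. This minimal sketch assumes Keywords AI parameters travel in the same JSON body as the OpenAI ones. The names `customer_identifier`, `fallback_models`, and `metadata` are assumptions for illustration, so check the Keywords AI parameter reference. `build_body` is a hypothetical helper.

```python
from typing import Any, Dict, List

# Standard OpenAI chat completion parameters.
OPENAI_PARAMS: Dict[str, Any] = {"temperature": 0.2, "max_tokens": 256}

# Keywords AI-specific parameters. These names are assumptions for
# illustration; confirm them against the Keywords AI parameter reference.
KEYWORDSAI_PARAMS: Dict[str, Any] = {
    "customer_identifier": "user-123",
    "fallback_models": ["gpt-4o-mini"],
    "metadata": {"pipeline": "rag-demo"},
}


def build_body(
    model: str,
    messages: List[Dict[str, str]],
    openai_params: Dict[str, Any],
    keywordsai_params: Dict[str, Any],
) -> Dict[str, Any]:
    """Merge both parameter groups into one request body, rejecting name clashes."""
    clashes = set(openai_params) & set(keywordsai_params)
    if clashes:
        raise ValueError(f"Parameters defined in both groups: {sorted(clashes)}")
    return {"model": model, "messages": messages, **openai_params, **keywordsai_params}
```

Rejecting clashes prevents one group from silently overriding a value in the other when the two dicts are merged.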

OpenAI Parameters

All OpenAI parameters are supported.

Keywords AI Parameters

Use Keywords AI parameters for advanced features.

Workflow Tracing

For complete visibility of your pipeline execution, add workflow tracing to see how data flows through each component.

Haystack Tracing Integration

Learn how to trace your entire Haystack pipeline